DISTRIBUTION: http://www.directives.doe.gov

INITIATED BY: Office of Environment, Safety and Health

DOE G 414.1-4

Approved 6-17-05

Certified 11-3-10

SAFETY SOFTWARE GUIDE

for use with

10 CFR 830 Subpart A, Quality Assurance Requirements, and DOE O 414.1C, Quality Assurance

[This Guide describes suggested nonmandatory approaches for meeting requirements. Guides are not requirements documents and are not construed as requirements in any audit or appraisal for compliance with the parent Policy, Order, Notice, or Manual.]

U.S. DEPARTMENT OF ENERGY

Washington, D.C.

NOT MEASUREMENT SENSITIVE


FOREWORD

This Department of Energy (DOE) Guide is approved by the Office of Environment, Safety and Health and is available for use by all DOE and National Nuclear Security Administration (NNSA) elements and their contractors. This Guide revises and supersedes earlier guidance identified in Appendix B to include new and updated information.

Comments, including recommendations for additions, modifications, or deletions, and other pertinent information, should be sent to the following:

Gustave E. Danielson, Jr.
U.S. DOE
Office of Quality Assurance Programs
1000 Independence Avenue SW
EH-31/270CC
Washington, DC 20585-0270
Phone: 301-903-2954
Fax: 301-903-4120
E-mail: [email protected]

Richard H. Lagdon, Jr. (Chip)
U.S. DOE
Director, Office of Quality Assurance Programs
1000 Independence Avenue SW
EH-31/270CC
Washington, DC 20585-0270
Phone: 301-903-4218
Fax: 301-903-4120
E-mail: [email protected]

Guides are part of the DOE directives system and are used to provide supplemental information regarding DOE/NNSA expectations for fulfilling requirements contained in Policies, Rules, Orders, Manuals, Notices, and Regulatory Standards. Guides are also used to identify Government and non-Government standards and acceptable methods for implementing DOE/NNSA requirements. Guides are not substitutes for requirements, nor do they introduce new requirements or replace technical standards used to describe established practices and procedures.


CONTENTS

BACKGROUND
1. INTRODUCTION
1.1 Purpose
1.2 Scope
1.3 Responsibility for Safety Software
1.4 Safety Software Quality Assurance
1.5 Software Quality Assurance Program
2. SAFETY SOFTWARE TYPES AND GRADING
2.1 Software Types
2.2 Graded Application
3. GENERAL INFORMATION
3.1 System Quality and Safety Software
3.2 Risk and Safety Software
3.3 Special-Purpose Software Applications
3.3.1 Toolbox and Toolbox-Equivalent Software Applications
3.3.2 Existing Safety Software Applications
3.4 Continuous Improvement, Measurement, and Metrics
3.5 Use of National/International Standards
4. RECOMMENDED PROCESS
5. GUIDANCE
5.1 Software Safety Design Methods
5.2 Software Work Activities
5.2.1 Software Project Management and Quality Planning
5.2.2 Software Risk Management
5.2.3 Software Configuration Management
5.2.4 Procurement and Supplier Management
5.2.5 Software Requirements Identification and Management
5.2.6 Software Design and Implementation
5.2.7 Software Safety
5.2.8 Verification and Validation


5.2.9 Problem Reporting and Corrective Action
5.2.10 Training Personnel in the Design, Development, Use, and Evaluation of Safety Software
6. ASSESSMENT AND OVERSIGHT
6.1 General
6.2 DOE and Contractor Assessment
6.3 DOE Independent Oversight
APPENDIX A. ACRONYMS AND DEFINITIONS
APPENDIX B. PROCEDURE FOR ADDING OR REVISING SOFTWARE TO OR DELETING SOFTWARE FROM THE DOE SAFETY SOFTWARE CENTRAL REGISTRY
APPENDIX C. USE OF ASME NQA-1-2000 AND SUPPORTING STANDARDS FOR COMPLIANCE WITH DOE 10 CFR 830 SUBPART A AND DOE O 414.1C AND SAFETY SOFTWARE
APPENDIX D. QUALITY ASSURANCE STANDARDS FOR SAFETY SOFTWARE IN DEPARTMENT OF ENERGY NUCLEAR FACILITIES
APPENDIX E. SAFETY SOFTWARE ANALYSIS AND MANAGEMENT PROCESS
APPENDIX F. DOE O 414.1C CRITERIA REVIEW AND APPROACH DOCUMENT
APPENDIX G. REFERENCES


BACKGROUND

The use of digital computers and programmable electronic logic systems has increased significantly since 1995, and their use is evident in safety applications at nuclear facilities across the Department of Energy (DOE or Department) complex. The commercial industry has increased its attention to quality assurance of safety software to ensure that safety systems and structures are properly designed and operate correctly. Recent DOE experience with safety software has led to increased attention to the safety-related decision-making process, the quality of the software used to design or develop safety-related controls, and the proficiency of personnel using the safety software.

The Department has recognized the need to establish rigorous and effective requirements for the application of quality assurance (QA) programs to safety software. In evaluating Defense Nuclear Facilities Safety Board (DNFSB) Recommendation 2002-1 and assessing the current state of safety software, the Department concluded that an integrated and effective Software Quality Assurance (SQA) infrastructure must be in place throughout the Department’s nuclear facilities. This is being accomplished through the Implementation Plan for Defense Nuclear Facilities Safety Board Recommendation 2002-1, Quality Assurance for Safety Software at Department of Energy Defense Nuclear Facilities.

To ensure the quality and integrity of safety software, DOE directives are being developed and revised based on existing SQA industry or Federal agency standards. This effort resulted in the development and issuance of DOE O 414.1C, Quality Assurance, dated 6-17-05, which includes specific SQA requirements; this Guide; and DOE Standard 1172-2003, Safety Software Quality Assurance Functional Area Qualification Standard, dated December 2003. The SQA requirements are to be implemented by DOE and its contractors. Nuclear facility contractors must implement the SQA requirements under their QA program for 10 CFR 830, Subpart A, Quality Assurance Requirements. Thus, the intent of this Guide is to provide instructional guidance for application of the DOE O 414.1C safety software requirements.


1. INTRODUCTION

1.1 PURPOSE

This Department of Energy (DOE or Department) Guide provides information and acceptable methods for implementing the safety software quality assurance (SQA) requirements of DOE O 414.1C, Quality Assurance, dated 6-17-05. DOE O 414.1C requirements supplement the quality assurance program (QAP) requirements of Title 10 Code of Federal Regulations (CFR) 830, Subpart A, Quality Assurance, for DOE nuclear facilities and activities. The safety SQA requirements for DOE, including the National Nuclear Security Administration (NNSA), and its contractors are necessary to implement effective quality assurance (QA) processes and achieve safe nuclear facility operations.

DOE promulgated the safety software requirements and this guidance to control or eliminate the hazards and associated postulated accidents posed by nuclear operations, including radiological operations. Safety software failures or unintended output can lead to unexpected system or equipment failures and undue risks to the DOE/NNSA mission, the environment, the public, and the workers. Thus, DOE G 414.1-4 has been developed to provide guidance on establishing and implementing effective QA processes tied specifically to nuclear facility safety software applications. DOE also has guidance¹ for the overarching QA program, which includes safety software within its scope. This Guide includes software application practices covered by appropriate national and international consensus standards and various processes currently in use at DOE facilities.² This guidance is also considered to be of sufficient rigor and depth to ensure acceptable reliability of safety software at DOE nuclear facilities.

This guidance should be used by organizations to help determine and support the steps necessary to address possible design or functional implementation deficiencies that might exist and to reduce operational hazards-related risks to an acceptable level. Attributes such as the facility life-cycle stage and the hazardous nature of each facility’s operations should be considered when using this Guide. Alternative methods to those described in this Guide may be used, provided they result in compliance with the requirements of 10 CFR 830 Subpart A and DOE O 414.1C. Another objective of this guidance is to encourage robust software quality methods that enable the development of high-quality safety applications.

1.2 SCOPE

This Guide is intended for use by all DOE/NNSA organizations and their contractors to assist in developing site- and facility-specific safety SQA processes and procedures compliant with 10 CFR 830 Subpart A and DOE O 414.1C.

The Department’s objectives for safety software requirements include:

¹ DOE G 414.1-2, Quality Assurance Management System Guide for use with 10 CFR 830.120 and DOE O 414.1, dated 6-17-99.

² See Appendix G for a list of consensus standards.


• grading SQA requirements based on risk, safety, facility life-cycle, complexity, and project quality requirements;

• applying SQA requirements to software life-cycle phases;

• developing procurement controls for acquisition of computer software and hardware that are provided with supplier-developed software and/or firmware;

• documenting and tracking customer requirements;

• managing software configuration throughout the life-cycle phases;

• performing verification and validation (V&V)³ processes;

• performing reviews of software configuration items, including reviewing the safety implications identified in the failure analysis and fault tolerance design; and

• training personnel who use and apply software in safety applications.

The scope of this Guide includes software applications that meet the safety software definitions stated in DOE O 414.1C. This includes software applications important to safety that may be included in or associated with structures, systems, or components (SSCs) for less than hazard category 3 facilities. Safety software includes safety system software, safety and hazard analysis software and design software, and safety management and administrative controls software.

Safety system software is software for a nuclear facility⁴ that performs a safety function as part of an SSC and is cited in either (1) a DOE-approved documented safety analysis or (2) an approved hazard analysis per DOE P 450.4, Safety Management System Policy, dated 10-15-96, and the DEAR clause.

Safety and hazard analysis software and design software is software that is used to classify, design, or analyze nuclear facilities. This software is not part of an SSC but helps to ensure the proper accident or hazards analysis of nuclear facilities or an SSC that performs a safety function.

Safety management and administrative controls software is software that performs a hazard control function in support of nuclear facility or radiological safety management programs or Technical Safety Requirements, or other software that performs a control function necessary to provide adequate protection from nuclear facility or radiological hazards. This software supports eliminating, limiting, or mitigating nuclear hazards to workers, the public, or the environment as addressed in 10 CFR 830, 10 CFR 835, and the DEAR ISMS clause.
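As a rough illustration of how the three definitions above partition safety software, the following Python sketch encodes each definition as a check over yes/no attributes of an application. The `SoftwareApplication` fields, the `classify` function, and the reading that analysis and design software is never part of an SSC are assumptions made for illustration, not DOE-defined data elements; the actual determination rests with the organization applying the software.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class SafetySoftwareType(Enum):
    SAFETY_SYSTEM = "safety system software"
    ANALYSIS_AND_DESIGN = "safety and hazard analysis software and design software"
    MANAGEMENT_AND_ADMIN_CONTROLS = "safety management and administrative controls software"


@dataclass
class SoftwareApplication:
    # Hypothetical yes/no attributes; the Guide defines the types in prose only.
    name: str
    part_of_ssc: bool                     # part of a structure, system, or component
    performs_safety_function: bool        # performs a safety function as part of the SSC
    cited_in_safety_basis: bool           # cited in a DOE-approved DSA or approved hazard analysis
    classifies_designs_or_analyzes: bool  # used to classify, design, or analyze nuclear facilities
    performs_hazard_control: bool         # performs a hazard control function (e.g., supports TSRs)


def classify(app: SoftwareApplication) -> List[SafetySoftwareType]:
    """Return every safety software type whose definition the application meets."""
    types = []
    if app.part_of_ssc and app.performs_safety_function and app.cited_in_safety_basis:
        types.append(SafetySoftwareType.SAFETY_SYSTEM)
    if not app.part_of_ssc and app.classifies_designs_or_analyzes:
        types.append(SafetySoftwareType.ANALYSIS_AND_DESIGN)
    if app.performs_hazard_control:
        types.append(SafetySoftwareType.MANAGEMENT_AND_ADMIN_CONTROLS)
    return types


# Example: a hypothetical accident consequence code used only for analysis.
consequence_code = SoftwareApplication(
    name="consequence-code",
    part_of_ssc=False,
    performs_safety_function=False,
    cited_in_safety_basis=False,
    classifies_designs_or_analyzes=True,
    performs_hazard_control=False,
)
print([t.value for t in classify(consequence_code)])
# prints ['safety and hazard analysis software and design software']
```

Returning a list rather than a single value leaves room for an application that meets more than one definition; an empty list means none of the three definitions is met under these assumed attributes.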

Additional definitions are included in Appendix A, Acronyms and Definitions.

³ Verification and validation in this Guide includes the ASME NQA-1 terms design verification and acceptance testing.

⁴ Per 10 CFR 830, quality assurance requirements apply to all DOE nuclear facilities, including radiological facilities (see 10 CFR 830, DOE Std 1120, and the DEAR clause).


Although this Guide has been developed for DOE nuclear facility software, it may also be useful for ensuring the quality of other software important to mission-critical functions, environmental protection, health and safety protection, safeguards and security, emergency management, or assets protection.

1.3 RESPONSIBILITY FOR SAFETY SOFTWARE

The Assistant Secretary for Environment, Safety and Health has the lead responsibility for promulgating requirements and guidance for safety software through the directives system per DOE O 414.1C. The organizations that use software should determine whether to qualify the software for safety applications. Organizations should coordinate SQA procedures with their respective Chief Information Officers and other appropriate organizations. DOE line organizations are responsible for providing direction and oversight of contractor implementation of SQA requirements.

1.4 SAFETY SOFTWARE QUALITY ASSURANCE

The scope of the Department’s QA Rule, 10 CFR 830 Subpart A, is stated as follows: “This subpart establishes quality assurance requirements for contractors conducting activities, including providing items or services, that affect, or may affect, nuclear safety of DOE nuclear facilities.” The scope of the QA Rule encompasses the contractor’s conduct of activities as they relate to safety software (items or services). Therefore, the contractor’s QAP includes safety software within its scope. DOE O 414.1C establishes the safety software QA requirements to be implemented under the Rule. 10 CFR 830 Subpart A and DOE O 414.1C require contractors to perform safety software work in accordance with the applicable criteria.

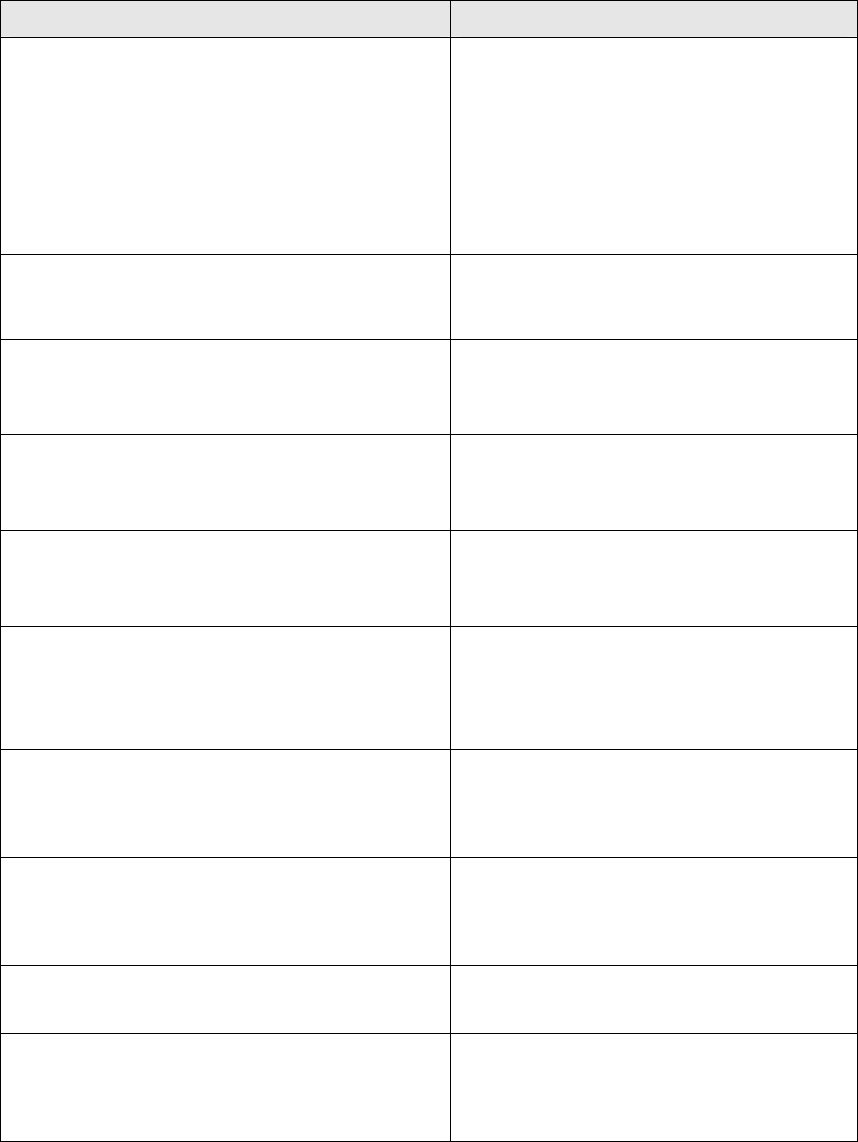

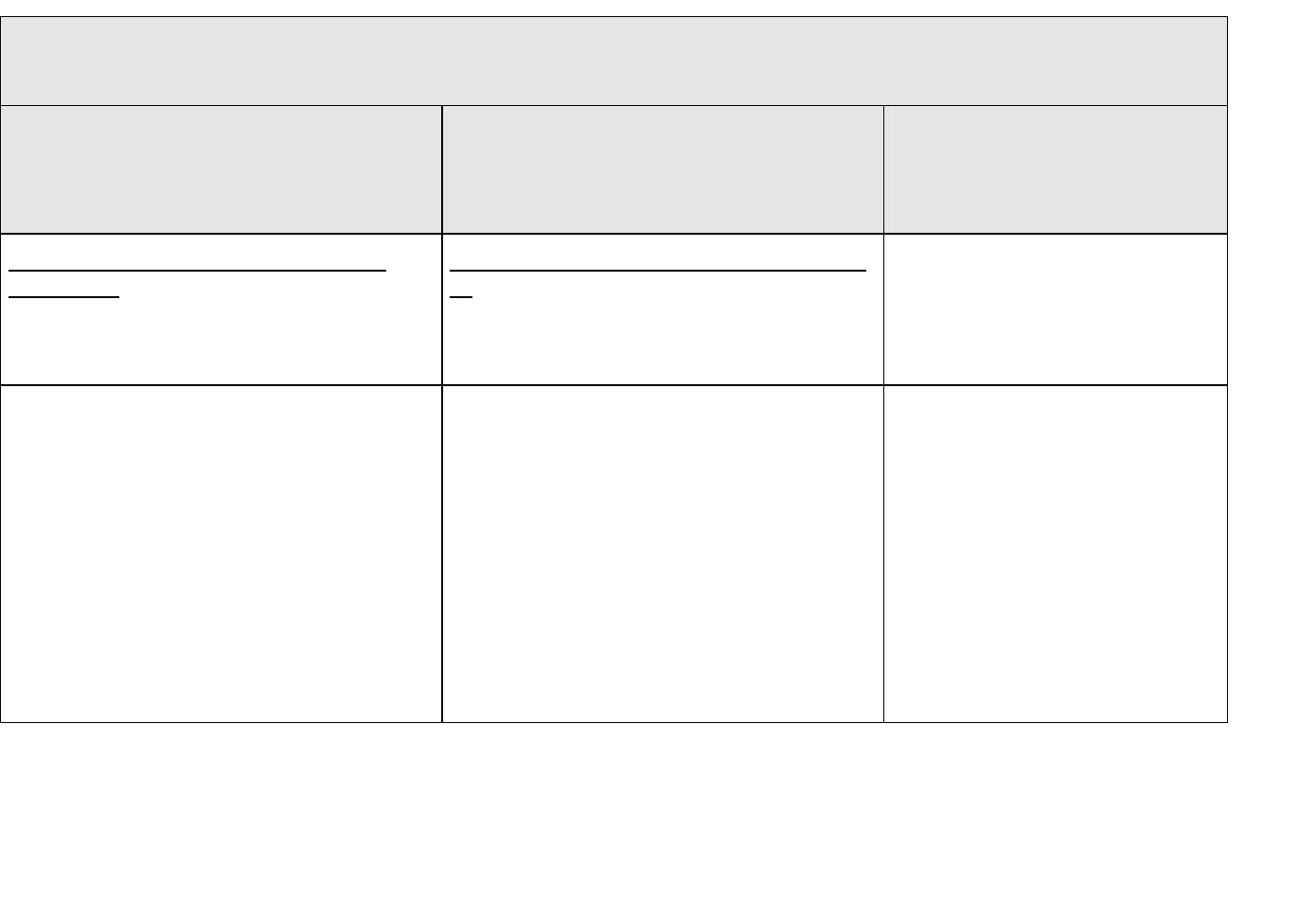

The various sections of this Guide discuss the application of the QA criteria from DOE O 414.1C and 10 CFR 830 Subpart A to the ten SQA work activities. Table 1 illustrates how the SQA work activities satisfy the QA criteria.

1.5 SOFTWARE QUALITY ASSURANCE PROGRAM

It is important that SQA be part of the overall QAP required for nuclear facilities in accordance with 10 CFR 830 Subpart A and DOE O 414.1C. Regardless of the application of the software, an appropriate level of quality infrastructure should be established and a commitment made to maintain this infrastructure for the safety software.

An SQA program establishes the appropriate safety software life-cycle practices, including safety design concepts, to ensure that software functions reliably and correctly performs the intended work specified for that safety software. In other words, SQA’s role is to minimize or prevent the failure of safety software and any undesired consequences of that failure. The rigor imposed by SQA is driven by the intended use of the safety software. More importantly, the rigor of SQA should address the risk of using such software. Effective safety software quality is one method for avoiding, minimizing, or mitigating the risk associated with the use of the software.

4 DOE G 414.1-4

6-17-05

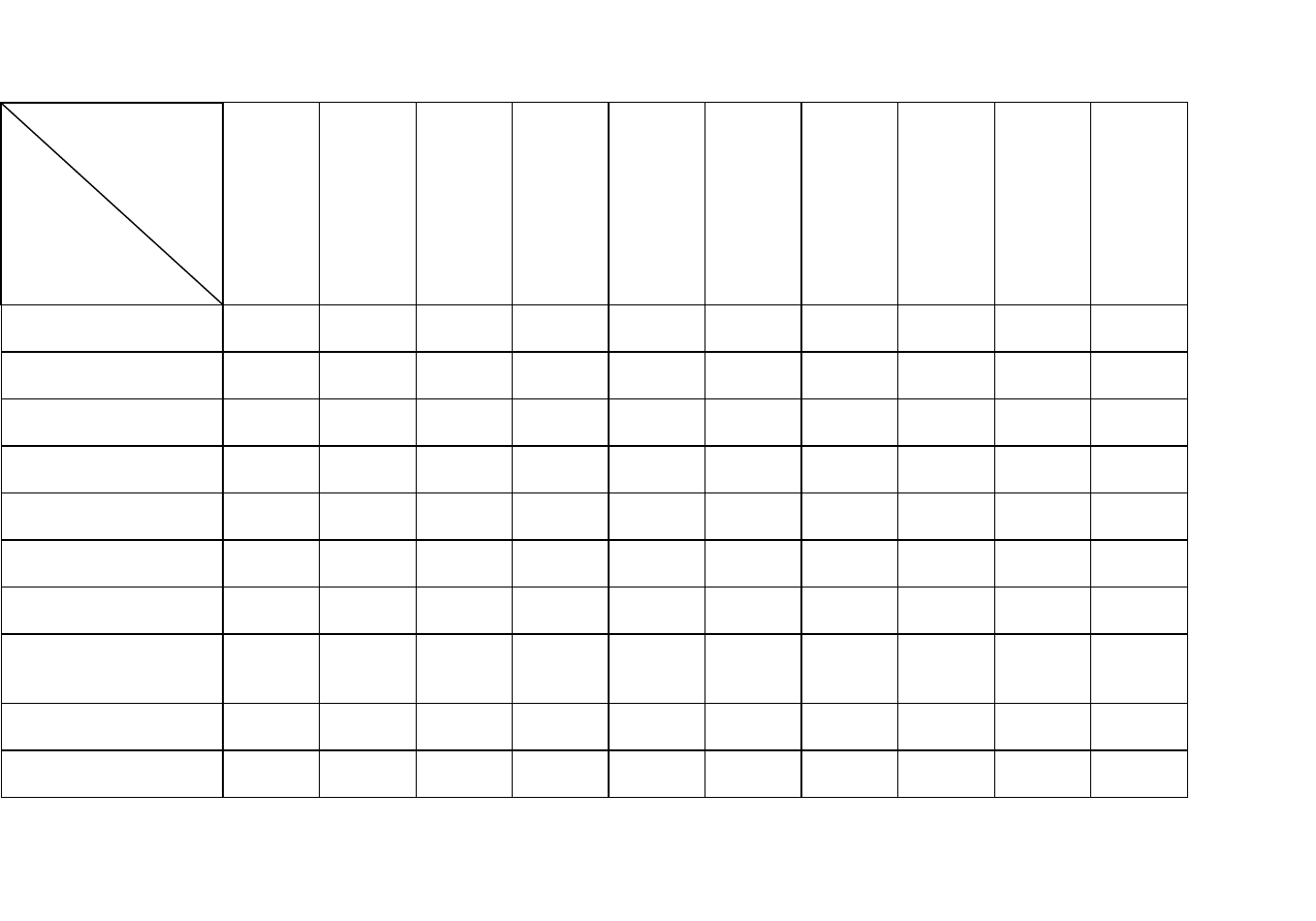

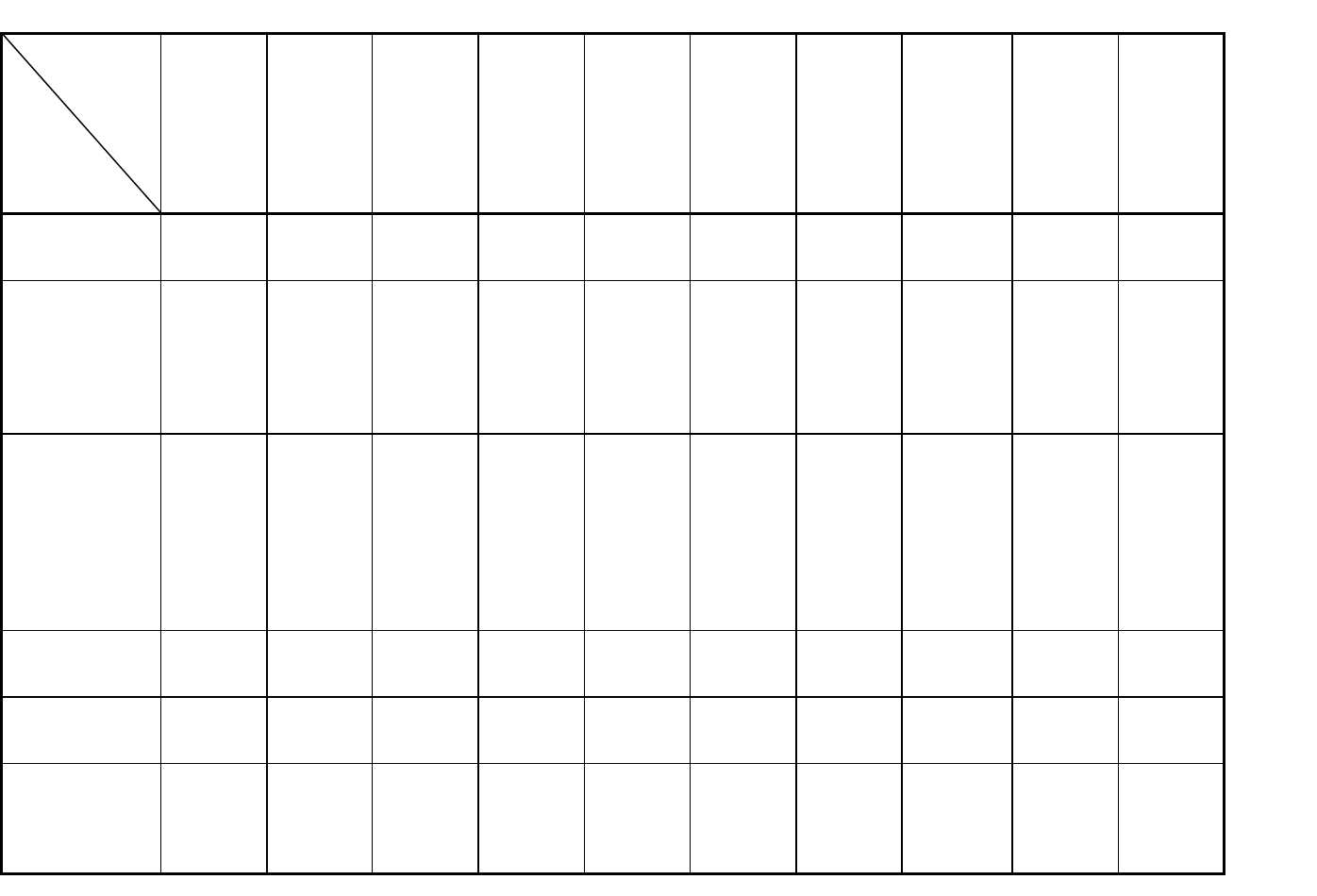

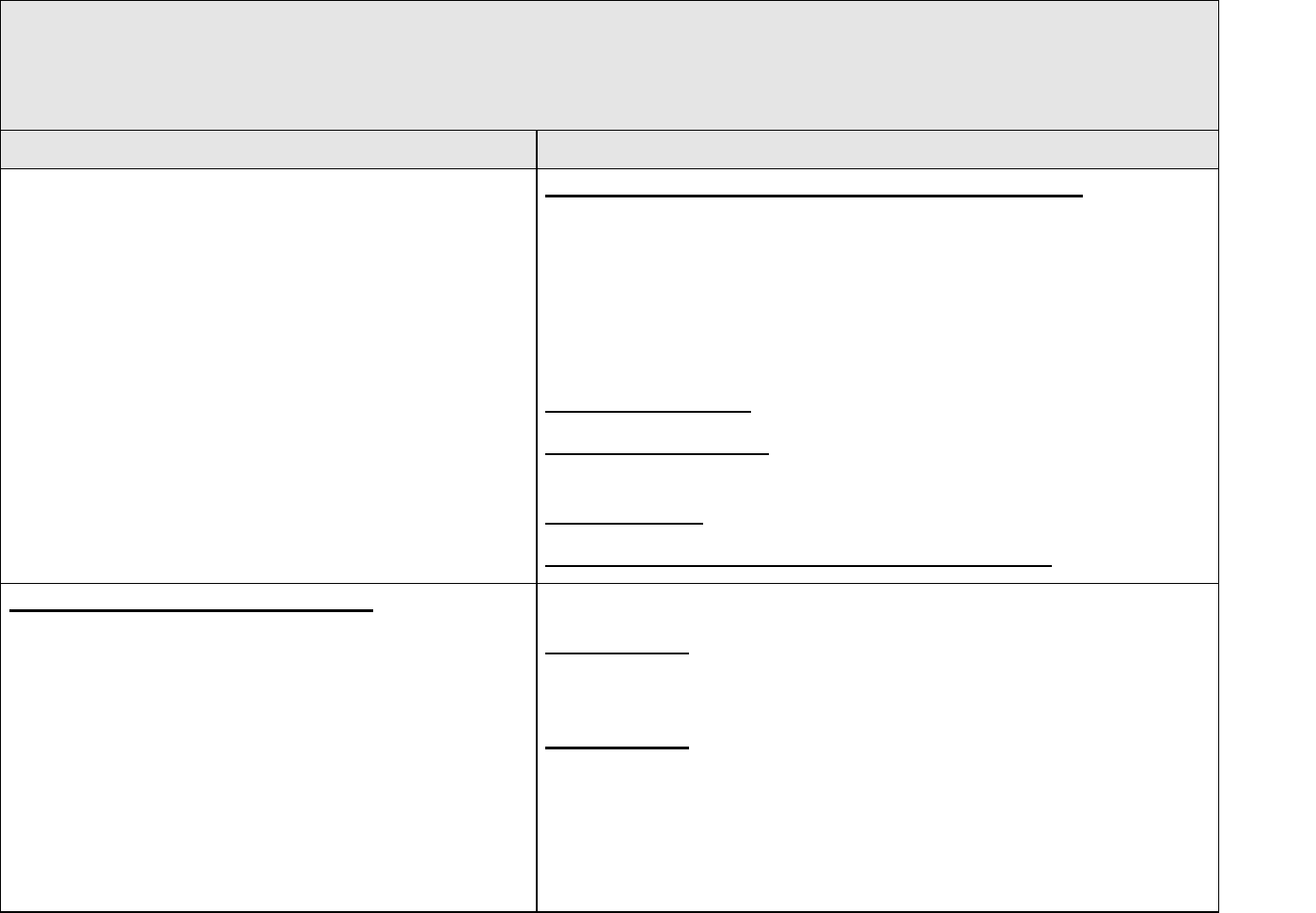

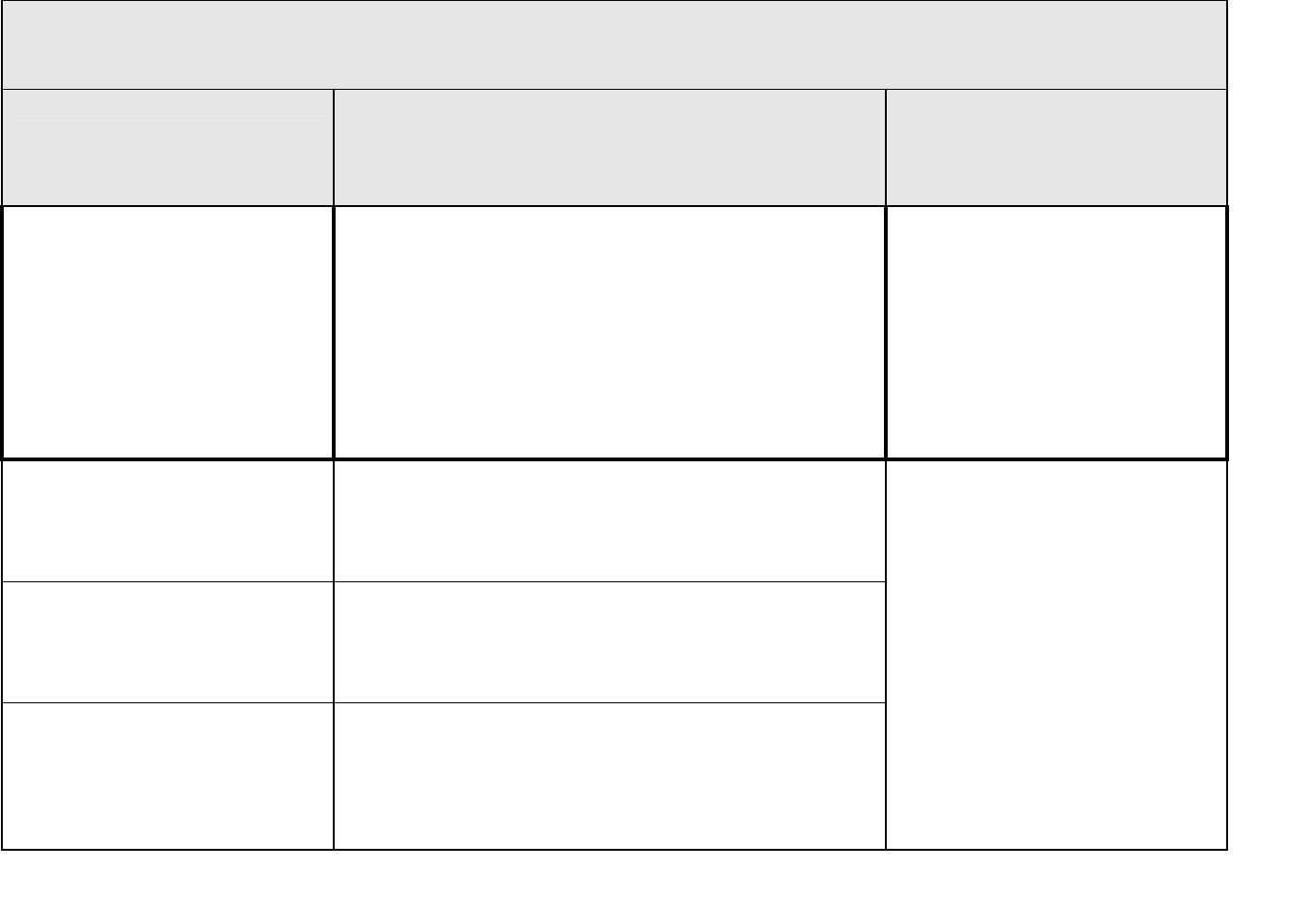

Table 1. An Illustration of Quality Assurance (QA) Criteria (10 CFR 830 Subpart A & DOE O 414.1C) Applicability to Software Quality Assurance (SQA) Work Activities

Columns (SQA work activities, left to right): (1) software (sw) project management and quality planning; (2) sw risk management; (3) sw configuration management; (4) procurement & supplier management; (5) sw requirements identification & management; (6) sw design & implementation; (7) sw safety; (8) verification & validation; (9) problem reporting & corrective action; (10) training of … safety sw.

Rows (10 CFR 830 QA criteria and DOE O 414.1C), with an X for each applicable work activity:

Program: X X X X X X X X X X
Training & Qualification: X
Quality Improvement: X X
Documents and Records: X X X X X X X X X X
Work Processes: X X X X X X X X X X
Design: X X X X
Procurement: X
Inspection & Acceptance Testing: X
Management Assessment: X X X X X X X X X
Independent Assessment: X X X X X X X X X X

Note: This table is only an illustration of QA criteria applicability. Actual application will be described in the organization’s QA program and safety software work process documents. For example, an independent assessment may be performed on any safety software quality element.


The goal of SQA for safety system software is to apply the appropriate quality practices to ensure the software performs its intended function and to mitigate the risk of failure of safety systems to acceptable and manageable levels. SQA practices are defined in national and international consensus standards. SQA cannot address the risks created by the failure of other system components (hardware, data, human process, and power system failures) but can address the software “reaction” to effects caused by these types of failures. SQA should not be isolated from system-level QA and other system-level activities. In many instances, hardware fail-safe methods are implemented to mitigate the risk of safety software failure. Additionally, QA activities should be implemented for other interfaces with safety software, such as hardware and human interfaces.
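The software “reaction” described above can be illustrated with a minimal Python sketch, assuming a hypothetical trip function that reads a single sensor value. Any reading that suggests an upstream hardware or data failure (missing, non-numeric, non-finite, or outside an assumed valid range) drives the output to an assumed fail-safe state instead of being trusted. The names `react_to_reading` and `OutputState`, the range limits, and the setpoint are illustrative only.

```python
import math
from enum import Enum


class OutputState(Enum):
    NORMAL = "normal"
    SAFE = "safe"  # hypothetical predefined fail-safe state (e.g., de-energized output)


# Hypothetical valid range for the sensor, in arbitrary engineering units.
SENSOR_MIN = 0.0
SENSOR_MAX = 500.0


def react_to_reading(reading, trip_setpoint: float) -> OutputState:
    """Return SAFE for any invalid, out-of-range, or at-or-above-setpoint reading."""
    # Missing or non-numeric data (bool is excluded because it subclasses int)
    # suggests a hardware, data, or communication failure upstream.
    if isinstance(reading, bool) or not isinstance(reading, (int, float)):
        return OutputState.SAFE
    # NaN or infinity is not a physically meaningful reading.
    if not math.isfinite(reading):
        return OutputState.SAFE
    # A value outside the sensor's valid range is treated as a failed sensor.
    if reading < SENSOR_MIN or reading > SENSOR_MAX:
        return OutputState.SAFE
    # Normal trip logic: reaching the setpoint also demands the safe state.
    if reading >= trip_setpoint:
        return OutputState.SAFE
    return OutputState.NORMAL


for value in (120.0, 350.0, None, float("nan"), 900.0):
    print(value, react_to_reading(value, trip_setpoint=300.0).value)
```

Defaulting to the safe state whenever the input is in doubt is one way to make the software's response to hardware or data failures predictable; whether a system has a safe state, and what it is, would come from the system-level safety analysis rather than from the software alone.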

2. SAFETY SOFTWARE TYPES AND GRADING

2.1 SOFTWARE TYPES

Software is typically either custom developed or acquired. Further characterizing these two basic types aids in selecting the applicable practices and approaches for the SQA work activities. For the purposes of this Guide, five types of software have been identified as commonly used in DOE applications: (1) custom developed, (2) configurable, (3) acquired, (4) utility calculation, and (5) commercial design and analysis.

The developed and acquired software types discussed in American Society of Mechanical Engineers (ASME) NQA-1-2000, Quality Assurance Requirements for Nuclear Facility Applications, are compatible with these five software types. Developed software as described in ASME NQA-1-2000 is directly associated with custom developed, configurable, and utility calculation software. Acquired software in this Guide maps directly to acquired software in ASME NQA-1-2000, which uses the terms acquired and procured software interchangeably.⁵ This Guide includes an additional software type, commercial design and analysis software, that is not directly related to either developed or acquired software. For this type, safety software quality requirements can only be specified through work activities described in contractual agreements with the supplier of the facility design and analysis services.
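The correspondence between the five software types and the two ASME NQA-1-2000 categories can be captured as a simple lookup table. This Python sketch is illustrative; the `SoftwareType` enumeration and the `nqa1_category` helper are hypothetical names, and the mapping reflects only the paragraph above.

```python
from enum import Enum
from typing import Optional


class SoftwareType(Enum):
    CUSTOM_DEVELOPED = "custom developed"
    CONFIGURABLE = "configurable"
    ACQUIRED = "acquired"
    UTILITY_CALCULATION = "utility calculation"
    COMMERCIAL_DESIGN_AND_ANALYSIS = "commercial design and analysis"


# ASME NQA-1-2000 category for each software type, as described above.
# None marks the type that is not directly related to either category.
NQA1_CATEGORY = {
    SoftwareType.CUSTOM_DEVELOPED: "developed",
    SoftwareType.CONFIGURABLE: "developed",
    SoftwareType.UTILITY_CALCULATION: "developed",
    SoftwareType.ACQUIRED: "acquired",  # NQA-1-2000 also calls this "procured"
    SoftwareType.COMMERCIAL_DESIGN_AND_ANALYSIS: None,
}


def nqa1_category(software_type: SoftwareType) -> str:
    """Describe how a software type relates to the NQA-1-2000 categories."""
    category: Optional[str] = NQA1_CATEGORY[software_type]
    if category is None:
        # Quality requirements come from the design and analysis services contract.
        return "none (specified through contractual agreements)"
    return category


for software_type in SoftwareType:
    print(f"{software_type.value}: {nqa1_category(software_type)}")
```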

Custom developed software is built specifically for a DOE application or to support the same function for a related government organization. It may be developed by DOE or one of its management and operating (M&O) contractors, or contracted with a qualified software company through the procurement process. Examples of custom developed software include material inventory and tracking database applications, accident consequence applications, control system applications, and embedded custom developed software that controls a hardware device.

Configurable software is commercially available software or firmware that allows the user to modify the structure and functioning of the software in a limited way to suit user needs. An example is software associated with programmable logic controllers (PLCs).

⁵ ASME NQA-1-2000, Quality Assurance Requirements for Nuclear Facility Applications, Subpart 2.7, Section 300, American Society of Mechanical Engineers, New York, New York, 2001, p. 105.


Acquired software is generally supplied through basic procurements, two-party agreements, or other contractual arrangements. Acquired software includes commercial off-the-shelf (COTS) software, such as operating systems, database management systems, compilers, software development tools, and commercial calculational software and spreadsheet tools (e.g., Mathsoft’s MathCad and Microsoft’s Excel). Downloadable software that is available at no cost to the user (referred to as freeware) is also considered acquired software. Firmware is acquired software. Firmware is usually provided by a hardware supplier through the procurement process and cannot be modified after receipt.

Utility calculation software typically uses COTS spreadsheet applications as a foundation and user-developed algorithms or data structures to create simple software products. The utility calculation software within the scope of this document is used frequently to perform calculations associated with the design of an SSC. Utility software that is used with high frequency may be labeled as custom software and may justify the same safety SQA work activities as custom developed software.⁶ With utility calculation software, it is important to recognize the difference between QA of the algorithms, macros, and logic that perform the calculations and QA of the COTS software itself. Utility calculation software includes the associated data sets, configuration information, and test cases for validation and/or calibration.
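To illustrate the distinction between QA of the user-developed logic and QA of the COTS spreadsheet, the sketch below re-expresses a hypothetical spreadsheet formula (radioactive decay, A = A0 * exp(-ln 2 * t / T_half)) as a Python function and checks it against stored test cases with known answers. The formula, the test values, the tolerance, and the names `decayed_activity`, `ValidationCase`, and `run_validation` are assumptions chosen because their expected results are easy to verify by hand; they are not drawn from this Guide.

```python
import math
from dataclasses import dataclass


def decayed_activity(a0: float, elapsed: float, half_life: float) -> float:
    """Hypothetical user-developed formula: A = A0 * exp(-ln(2) * t / T_half)."""
    return a0 * math.exp(-math.log(2) * elapsed / half_life)


@dataclass(frozen=True)
class ValidationCase:
    description: str
    inputs: tuple      # (a0, elapsed, half_life), in consistent units
    expected: float    # independently known answer


# Test cases maintained with the calculation, as part of the utility software.
VALIDATION_CASES = [
    ValidationCase("no elapsed time", (100.0, 0.0, 30.0), 100.0),
    ValidationCase("one half-life", (100.0, 30.0, 30.0), 50.0),
    ValidationCase("two half-lives", (100.0, 60.0, 30.0), 25.0),
]


def run_validation(func, cases, rel_tol=1e-9):
    """Return (description, actual, expected, passed) for each case."""
    results = []
    for case in cases:
        actual = func(*case.inputs)
        passed = math.isclose(actual, case.expected, rel_tol=rel_tol)
        results.append((case.description, actual, case.expected, passed))
    return results


for description, actual, expected, passed in run_validation(decayed_activity, VALIDATION_CASES):
    print(f"{description}: got {actual:.6g}, expected {expected:.6g}, {'PASS' if passed else 'FAIL'}")
```

Because the test cases travel with the calculation, the same cases can be rerun after a spreadsheet or COTS upgrade to check the user-developed logic independently of the COTS product's own QA.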

Commercial design and analysis software is used in conjunction with design and analysis services provided to DOE by a commercial contractor. An example would be where DOE or an M&O contractor contracts for specified design services support. The design service provider uses its independently developed or acquired software without DOE involvement or support. DOE then receives a completed design. Procurement contracts can be enhanced to require that the software used in the design or analysis services meet the requirements in DOE O 414.1C.

2.2 GRADED APPLICATION

Proper implementation of DOE O 414.1C will be enhanced by grading safety software based on its application. Safety software grading levels should be described in terms of safety consequence and regulatory compliance. This Guide uses the grading levels and the software types (custom developed, configurable, acquired, utility calculation, and commercial design and analysis tools) to recommend how the SQA work activities are applied. The grading levels are defined as follows.

Level A: This grading level includes safety software applications that meet one or more of the following criteria.

1. Software failure that could compromise a limiting condition for operation.

2. Software failure that could cause a reduction in the safety margin for a safety SSC that is cited in a DOE-approved documented safety analysis.

3. Software failure that could cause a reduction in the safety margin for other systems, such as toxic or chemical protection systems, that are cited in either (a) a DOE-approved documented safety analysis or (b) an approved hazard analysis per DOE P 450.1 and the DEAR ISMS clause.

4. Software failure that could result in nonconservative safety analysis, design, or misclassification of facilities or SSCs.

⁶ ASME NQA-1-2000, op. cit., Part 4, Subpart 4.1, Section 101.1, p. 227.

Level B: This grading level includes safety software applications that do not meet Level A criteria but meet one or more of the following criteria.

1. Safety management databases used to aid in decision making whose failure could impact safety SSC operation.

2. Software failure that could result in incorrect analysis, design, monitoring, alarming, or recording of hazardous exposures to workers or the public.

3. Software failure that could compromise the defense-in-depth capability for the nuclear facility.

Level C: This grading level includes software applications that do not meet Level B criteria but meet one or more of the following criteria.

1. Software failure that could cause a potential violation of regulatory permitting requirements.

2. Software failure that could affect environment, safety, and health monitoring or alarming systems.

3. Software failure that could affect the safe operation of an SSC.
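The Level A, B, and C criteria are applied in order: a higher level is checked first, and a lower level applies only when no higher-level criterion is met. A minimal Python sketch of that precedence follows, assuming the organization has already answered each criterion as a yes/no question about the consequences of a software failure; the field names in the hypothetical `FailureImpacts` class are shorthand for the criteria listed above, not DOE terminology.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FailureImpacts:
    """Hypothetical yes/no answers about what a failure of the software could do."""
    # Level A criteria
    compromises_lco: bool = False
    reduces_margin_of_dsa_safety_ssc: bool = False
    reduces_margin_of_other_cited_system: bool = False
    nonconservative_analysis_or_misclassification: bool = False
    # Level B criteria
    safety_database_impacts_ssc_operation: bool = False
    incorrect_exposure_analysis_or_monitoring: bool = False
    compromises_defense_in_depth: bool = False
    # Level C criteria
    potential_permit_violation: bool = False
    affects_esh_monitoring_or_alarming: bool = False
    affects_safe_ssc_operation: bool = False


def grading_level(f: FailureImpacts) -> Optional[str]:
    """Return 'A', 'B', or 'C' for the highest level whose criteria are met."""
    if any([f.compromises_lco, f.reduces_margin_of_dsa_safety_ssc,
            f.reduces_margin_of_other_cited_system,
            f.nonconservative_analysis_or_misclassification]):
        return "A"
    if any([f.safety_database_impacts_ssc_operation,
            f.incorrect_exposure_analysis_or_monitoring,
            f.compromises_defense_in_depth]):
        return "B"
    if any([f.potential_permit_violation,
            f.affects_esh_monitoring_or_alarming,
            f.affects_safe_ssc_operation]):
        return "C"
    return None  # none of the listed Level A, B, or C criteria is met


print(grading_level(FailureImpacts(compromises_lco=True, affects_safe_ssc_operation=True)))
# prints A
```

For example, software whose failure could both compromise a limiting condition for operation and affect safe SSC operation is graded Level A, because the Level A check runs first.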

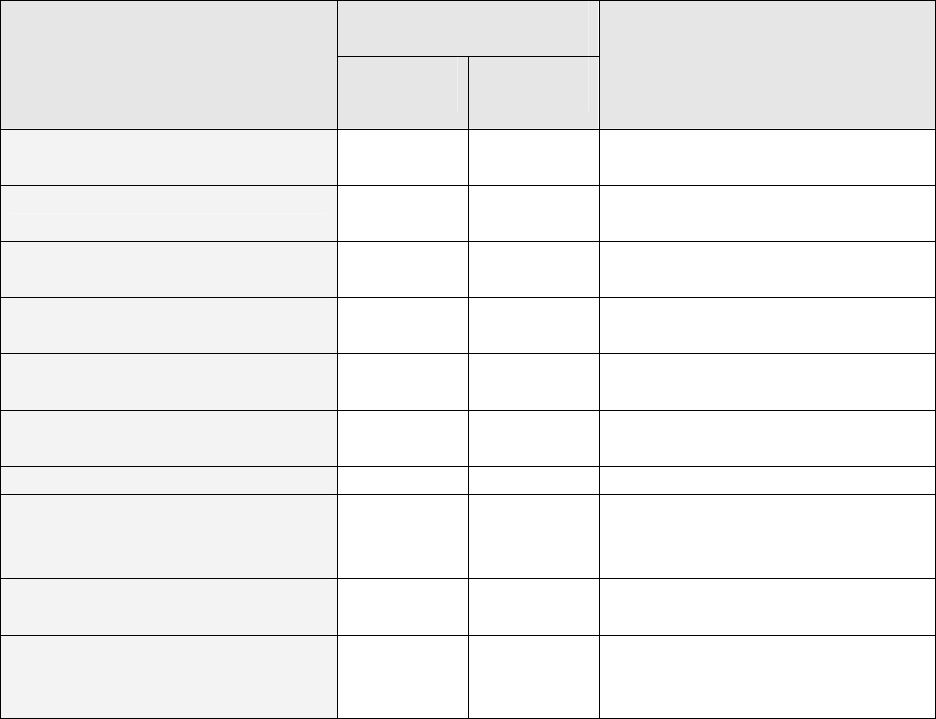

The grading level criteria should provide a higher grading level for software in nuclear facilities categorized as Category 1, 2, or 3 and a lower grading level for software in facilities categorized as less than Category 3. Table 2 illustrates the association of the grading criteria described above with facility categorization.

Using the grading levels and the safety software types in Table 2, select and implement applicable software quality work activities from the following list to ensure that safety software performs its intended functions. DOE O 414.1C specifies that ASME NQA-1-2000, Quality Assurance Requirements for Nuclear Facility Applications, or other national or international consensus standards that provide a level of quality assurance requirements equivalent to that of ASME NQA-1-2000, must be used. As specified in DOE O 414.1C, the standards used must be specified by the user and approved by DOE. This Guide provides acceptable implementation strategies and appropriate standards for these work activities.

1. Software project management and quality planning.

2. Software risk management.

3. Software configuration management (SCM).


4. Procurement and supplier management.

5. Software requirements identification and management.

6. Software design and implementation.

7. Software safety.

8. V&V.

9. Problem reporting and corrective action.

10. Training of personnel in the design, development, use, and evaluation of safety software.

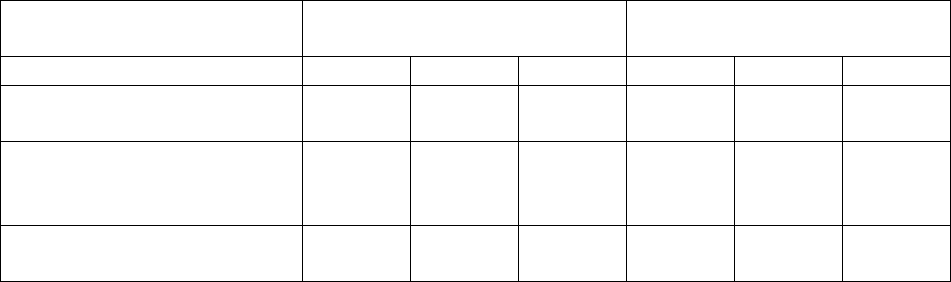

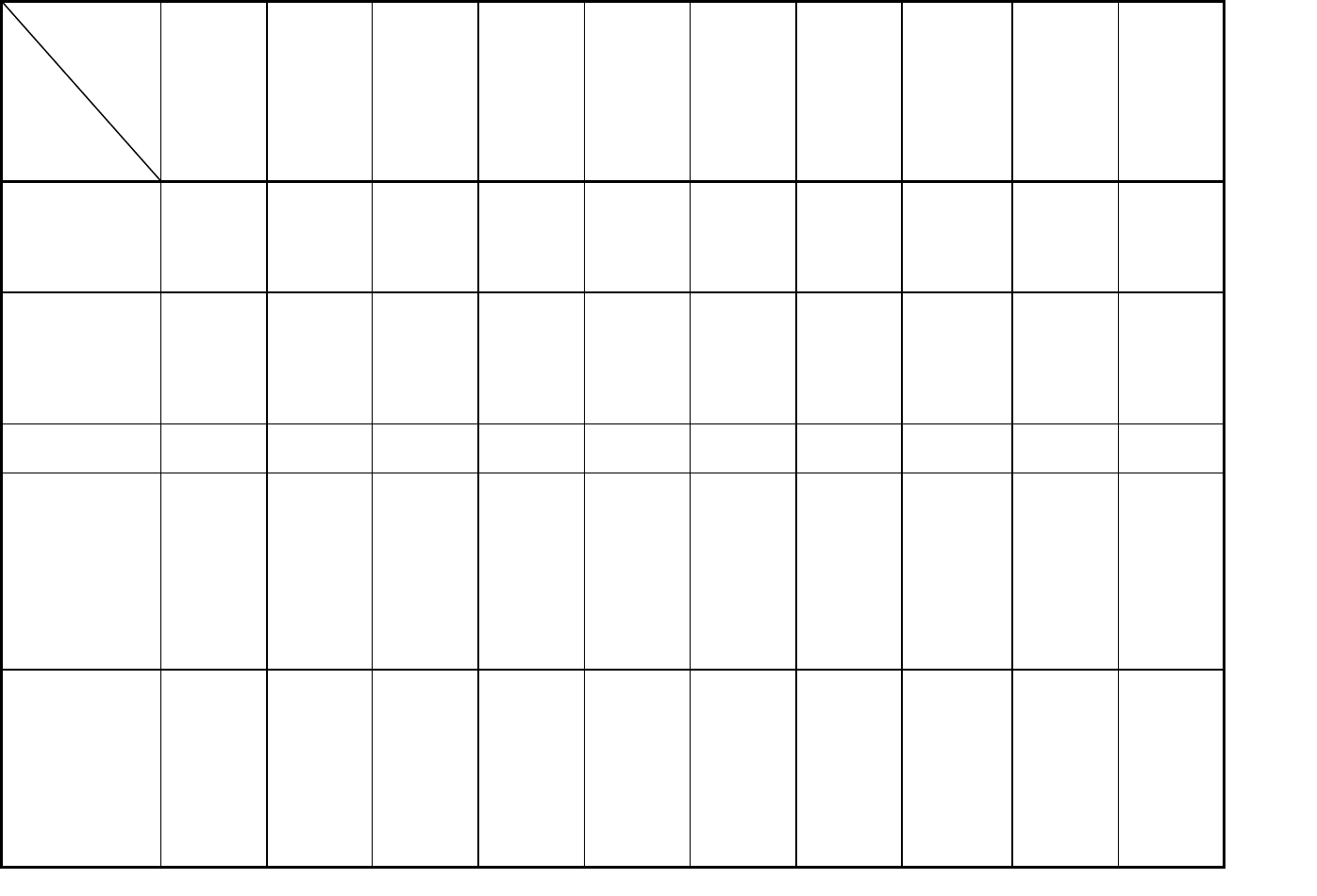

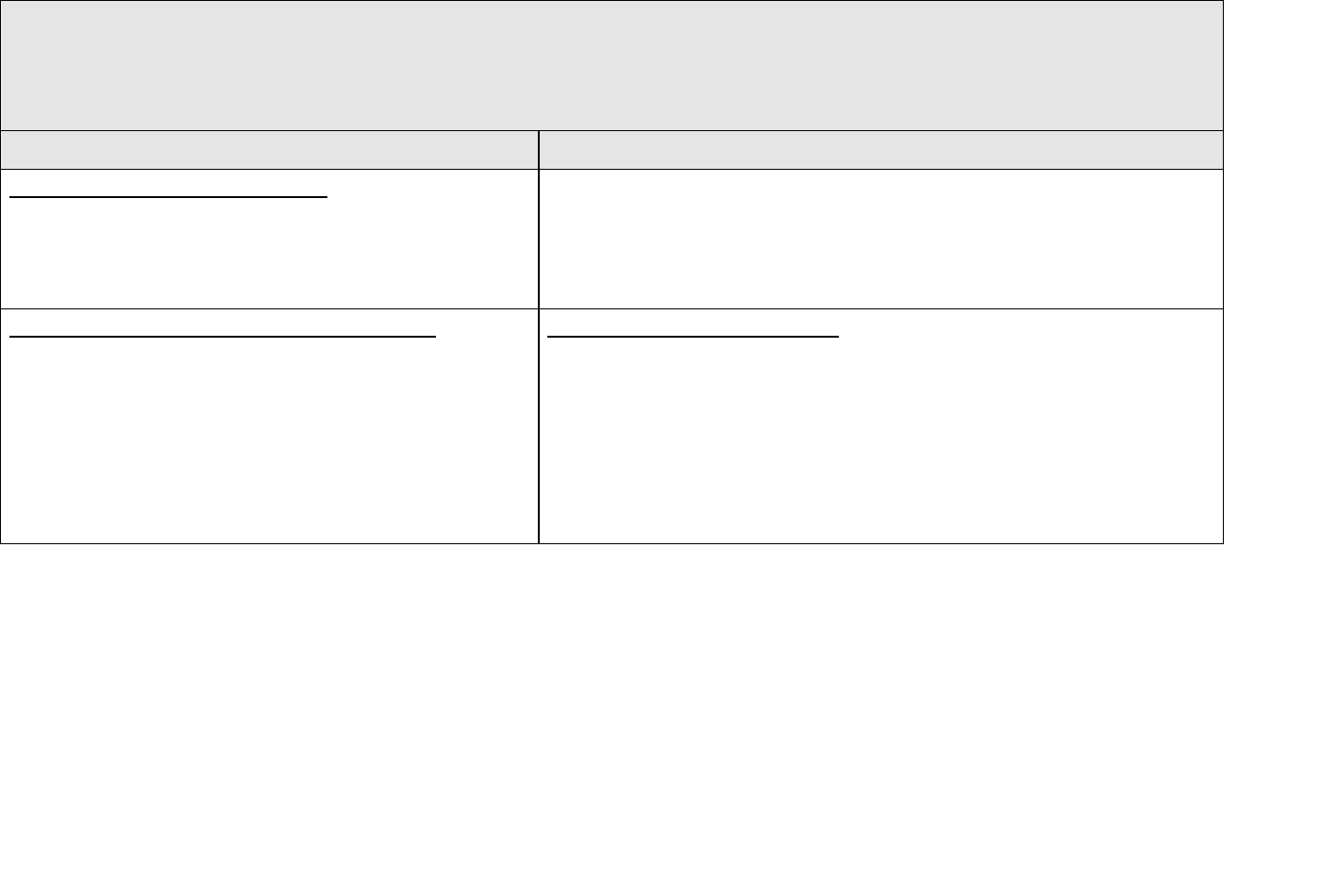

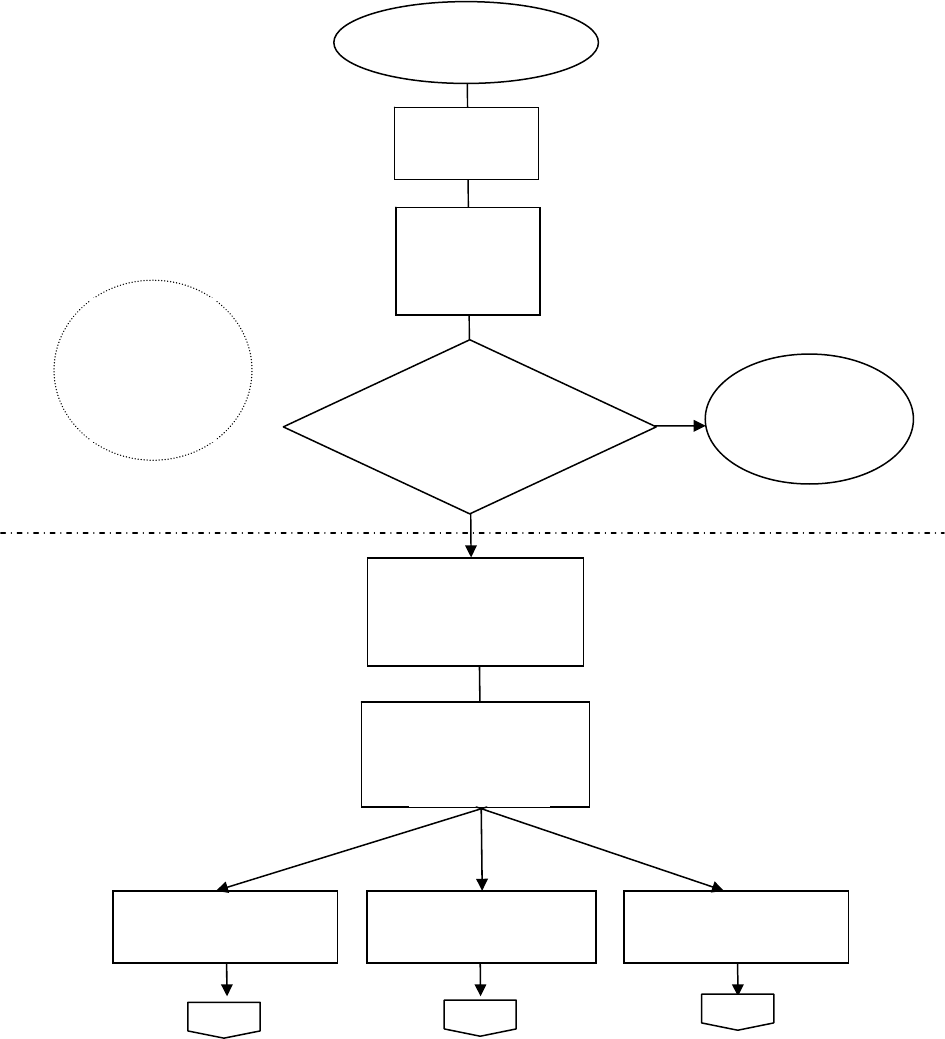

Table 2. Grading Criteria and Facility Categorization Illustration

Columns: grading levels A, B, and C for nuclear facilities of Category 1, 2, or 3, followed by grading levels A, B, and C for nuclear facilities of less than Category 3.

Safety System Software: X X X

Safety & Hazard Analysis Software & Design Software*: X X X X X X

Safety Management & Admin Controls Software: X X X X

* Safety and hazard analysis software and design software includes software used to classify facilities. Because this software is used before the facility classification determination, the safety and hazard analysis software and design software type has been identified as being applicable for all grading levels in all categories of facilities.

The determination of what constitutes safety software is made by the organization applying the software, based on the requirements in DOE O 414.1C and 10 CFR 830 Subparts A and B. The application of the software determines whether it is safety related and how it should be graded. Therefore, the organization applying the software is responsible for evaluating and designating the software as safety software and then ensuring that the software development and operations have followed the appropriate QA procedures.

3. GENERAL INFORMATION

3.1 SYSTEM QUALITY AND SAFETY SOFTWARE

Maintaining the integrity, safety, and security of all DOE assets and resources is paramount for DOE’s mission. Since software is an integral part of DOE’s resources, the integrity, safety, and security attributes of its software resources are critical to DOE’s mission. All three attributes are interdependent: compromised security access could present a potential safety hazard, and if the integrity of either the data or the application itself has been compromised, whether accidentally or maliciously, safety could be compromised. Therefore, when safety software is being addressed, the integrity and security issues should likewise be addressed.
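One common way to detect accidental or malicious changes to safety software or its data is to compare cryptographic digests of the files against a baseline recorded when the software was approved. The following Python sketch assumes such a baseline exists as a mapping from relative file path to SHA-256 digest; the function names `sha256_of` and `verify_against_baseline` and the baseline format are illustrative assumptions, and a digest check complements, rather than replaces, access control and configuration management.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def sha256_of(path: Path) -> str:
    """Compute the SHA-256 digest of a file, reading it in 64 KiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_against_baseline(baseline: Dict[str, str], root: Path) -> List[str]:
    """Return relative paths that are missing or whose digest differs from the baseline.

    `baseline` is a hypothetical record mapping each relative file path (executable,
    configuration file, or data set) to the SHA-256 digest captured at approval.
    """
    mismatches = []
    for relative_path, expected_digest in sorted(baseline.items()):
        candidate = root / relative_path
        if not candidate.is_file() or sha256_of(candidate) != expected_digest:
            mismatches.append(relative_path)
    return mismatches
```

A non-empty result would then be handled through the organization's problem reporting and corrective action process before the software is used.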

Other system-level issues affecting safety software include the availability of trained and knowledgeable personnel to develop, maintain, and use the software; human factors issues, such as the understandability of displays or ambient lighting conditions; and potential electromagnetic interference/radio frequency interference, which should be analyzed. Fault tolerance and common cause failure issues, performance requirements, and functional requirements that have safety, security, or integrity implications need to be properly identified, analyzed, and propagated to the safety software.

From the foregoing, it can be seen that there are several interdependencies and tradeoffs that should be addressed when integrating software into safety systems. Robust software quality engineering processes are clearly necessary when safety software applications are required. However, merely ensuring that a “good” software engineering process or V&V activities exist is not, by itself, sufficient to produce safe and reliable software.⁷ The life-cycle process should focus on the safety issues in addition to the basic software quality engineering principles. Both of these concepts are detailed in this Guide.

3.2 RISK AND SAFETY SOFTWARE

Software rarely functions as an independent entity. Software is typically a component of a

system much in the same way hardware, data, and procedures are system components. Therefore,

to understand the risk associated with the use of software, the software function should be

considered a part of an overall system function.

The consequences of software faults need to be addressed in terms of the contribution of a

software failure to an overall system failure. Issues such as security, training of operational personnel, electromagnetic interference, human factors, or system reliability have the potential to be safety issues. For example, if the security of the system can be compromised, then the safety software can also be compromised. Controlling access to the system is key to maintaining the integrity of the safety software. Likewise, if human factors issues such as ambient lighting conditions and ease of use are important, the associated risks need to be addressed through either design or training. For PLCs or networked safety software applications, electromagnetic interference could pose risks to the operation of the safety software system.

Once the software’s function within the overall system’s function is known, the appropriate software life-cycle and system life-cycle practices can be identified to minimize the risk of software failure on the system. Rigor can then be applied commensurate with the risk. Managing the risk appropriately is the key to managing a safety software system. Unless the risks and trade-offs of either doing or not doing an activity are evaluated, there is no true understanding of the issues involved in the safety software system. Time and resource constraints should be balanced against the probability of occurrence and the potential consequences of the worst-case scenario. If the safety system staff inappropriately invokes the strictest rigor for a Level B application, the application may never be fielded properly. On the other hand, if the process activities are performed only minimally or inappropriately for a Level A safety software application, very adverse consequences could occur for which no mitigation strategy exists. Appropriate project management is a risk management strategy, especially for safety software applications.

7 Leveson, Nancy, Safeware: System Safety and Computers, Addison Wesley, 1995.

3.3 SPECIAL-PURPOSE SOFTWARE APPLICATIONS

Several categories of software have a unique purpose in safety-related functions required to

support DOE nuclear facility operations. This section contains an overview of the

special-purpose software and the additional considerations that should be addressed by SQA

programs, processes, and procedures.

3.3.1 Toolbox and Toolbox-Equivalent Software Applications

Toolbox codes represent a small number of standard computer models or codes supporting DOE

safety analysis. These codes have widespread use and are of appropriate qualification for use

within DOE. The toolbox codes are acknowledged as part of DOE’s Safety Software Central

Registry. These codes are verified and validated and constitute a “safe harbor” methodology.

That is to say, the analysts using these codes do not need to present additional defense as to their

qualification provided that the analysts are sufficiently qualified to use the codes and the input

parameters are valid. These codes may also include commercial or proprietary design codes

where DOE considers additional SQA controls are appropriate for repetitive use in safety

applications and there is a benefit to maintaining centralized control of the codes. The following six

widely applied safety analysis computer codes have been designated as “toolbox” codes.

• ALOHA (chemical dispersion analysis)

• CFAST (fire analysis)

• EPIcode (chemical dispersion analysis)

• GENII (radiological dispersion analysis)

• MACCS2 (radiological dispersion analysis)

• MELCOR (leak path factor analysis)

The currently designated “toolbox” codes, and any software recognized in the future as meeting the “toolbox” equivalency criteria, are no different from other custom developed safety software as defined in Section 2.1. Consequently, software of this category should be developed or acquired, maintained, and controlled by applying sound software practices as described in Section 5 of this Guide.

In the future, new versions of software may be added to the Central Registry while the older

versions are removed. Over time, some of the software may be retired and recommended not to

be used in DOE safety analysis. Still other software may be added through the formal

toolbox-equivalent process, having been recognized as meeting the equivalency criteria. Thus,

the Central Registry collection of safety software applications will be expected to evolve as software life-cycle phases, usage, and application requirements change. Appendix B addresses the process for adding new software applications and versions to, and removal of retired software from, the Central Registry.

Additional information on the detailed toolbox SQA procedures, criteria, and evaluation plan; the evaluation of the software relative to current SQA criteria (i.e., assessment of the margin of the deficiencies or “gap” analysis); user guidance documentation; description of the toolbox-equivalent process; and code-specific information may be found in the Central Registry portion of the DOE SQA Knowledge Portal (http://www.eh.doe.gov/sqa/central_registry.htm).

3.3.2 Existing Safety Software Applications

Existing software that has not been previously approved under a QA program consistent with DOE O 414.1C and has been identified as safety software should be evaluated using the graded approach framework that is described in Section 5. This software is often referred to as legacy software. In many cases this category of software originally met DOE or industry requirements, but SQA for existing software was not updated as the SQA standards were revised.

Existing safety software should be identified and controlled prior to evaluation using the graded approach framework in this Guide. The evaluation performed should be adequate to address the correct operation of the safety software in the environment in which it is being used. This evaluation should include (1) identification of the capabilities and limitations for intended use, (2) any test plans and test cases required to demonstrate those capabilities, and (3) instructions for use within the limitations.⁸ One example of this evaluation is an a posteriori review⁹ as described in American Nuclear Society (ANS) standard ANS 10.4. Future modifications to existing safety software should meet all safety software work activities in DOE O 414.1C associated with the changes to the safety software.

3.4 CONTINUOUS IMPROVEMENT, MEASUREMENT, AND METRICS

Lord Kelvin stated, “If you can not measure it, you can not improve it.”¹⁰ This truism especially applies to safety software systems. Metrics used throughout the life-cycle should bolster confidence that the software applications will achieve their mission in a safe and reliable manner. If design, testing, or software reliability measures are unknown, then there is no assurance that the safety software has sufficient robustness to minimize the risks.

DOE O 414.1C criterion 3, Quality Improvement, specifies that processes should be established and implemented to detect and prevent problems. Measurements, and the metrics developed from these measures, can be indicators of potential future problems, so that steps can be initiated to prevent their occurrence. For long-term avoidance of problems, continuous improvement methods should be implemented to determine the root causes and eliminate the events that could lead to a recurrence. Metrics further provide qualitative or quantitative indicators of improvement, or the lack thereof, when a process or work activity has been modified. Metrics are the evidence that an improvement has occurred. Institute of Electrical and Electronics Engineers (IEEE) Standards 982.1 and 982.2 both provide recommendations on which metrics to use and on the software life-cycle phase in which applying each metric is most appropriate.

8 ASME NQA-1-2000, op. cit., Part II, Subpart 2.7, Section 302, p. 105.

9 American National Standards Institute (ANSI)/American Nuclear Society (ANS) 10.4-1987 (R1998), Guidelines for the Verification and Validation of Scientific and Engineering Computer Programs for the Nuclear Industry, ANS, 1998, Section 11, pp. 29–32.

10 Lord Kelvin (Sir William Thomson, 1824–1907)

3.5 USE OF NATIONAL/INTERNATIONAL STANDARDS

Title 10 CFR 830 Subpart A and DOE O 414.1C require the use of standards to develop and implement a QAP. National/international standards facilitate a common software quality engineering approach to developing or documenting software based upon a consensus of experts in the particular topic areas. Many national and international standards bodies have developed software standards to ensure that the recognized needs of their industry and users are satisfactorily met.

In the United States, ASME is the nationally accredited body for the development of nuclear facility QA standards. DOE O 414.1C cites ASME NQA-1-2000, or other national or international consensus standards that provide an equivalent level of quality assurance requirements, as the appropriate standard for QAPs applied to nuclear-related activities (e.g., safety software). The ten QA criteria in 10 CFR 830 Subpart A and DOE O 414.1C are mapped to ASME NQA-1-2000 in Appendix C. DOE O 414.1C also requires that additional standards be used to address specific work activities conducted under the QAP, such as safety software.

In the case of ASME NQA-1-2000, Part I,¹¹ the requirements generally apply to safety software work activities. For example, Requirements 3, 4, 7, 11, 16, and 17 for Design Control, Procurement Document Control, Control of Purchased Items and Services, Test Control, Corrective Action, and Quality Assurance Records (respectively) can have specific safety software applicability. In addition, ASME NQA-1-2000, Part II, Subpart 2.7, and Part IV, Subpart 4.1, specifically address “quality assurance requirements for computer software for nuclear facility applications” and “guide on quality assurance requirements for software” (respectively). As stated in the introduction of Part II, Subpart 2.7, this subpart “provides requirements for the acquisition, development, operation, maintenance, and retirement of software.” Table 3 provides a cross reference of ASME NQA-1-2000 with the ten SQA work activities in DOE O 414.1C. Although ASME NQA-1-2000 provides excellent process guidance for a software quality engineering process for managing software development, maintenance, or procurement, or otherwise acquiring software, it does not provide detailed guidance specific to safety software.

Appendix D of this Guide includes references to other standards useful in achieving compliance with 10 CFR 830 Subpart A and DOE O 414.1C for safety software work activities. Use of these consensus standards can aid in the development of a robust safety software quality engineering process and a resulting software product that is adequate for all safety software applications.

11 ASME NQA-1-2000, op. cit., Part I.

DOE G 414.1-4

6-17-05

13

Recognizing that there are five OE listed in

Section 2.1, the safety software analyst needs a defined process to enable a determination of

w

fety

5.1 SOFTWARE SAFETY DESIGN M

Safety should be designed into a system, just as quality should be built into the system. Safe

n nt, uses two primary approaches:

(

h

ces is

the first approach to developing high quality software systems. These practices can be

a

e

e more integrated model, Capability Maturity

M

12

Leveson, op. cit., p. 398.

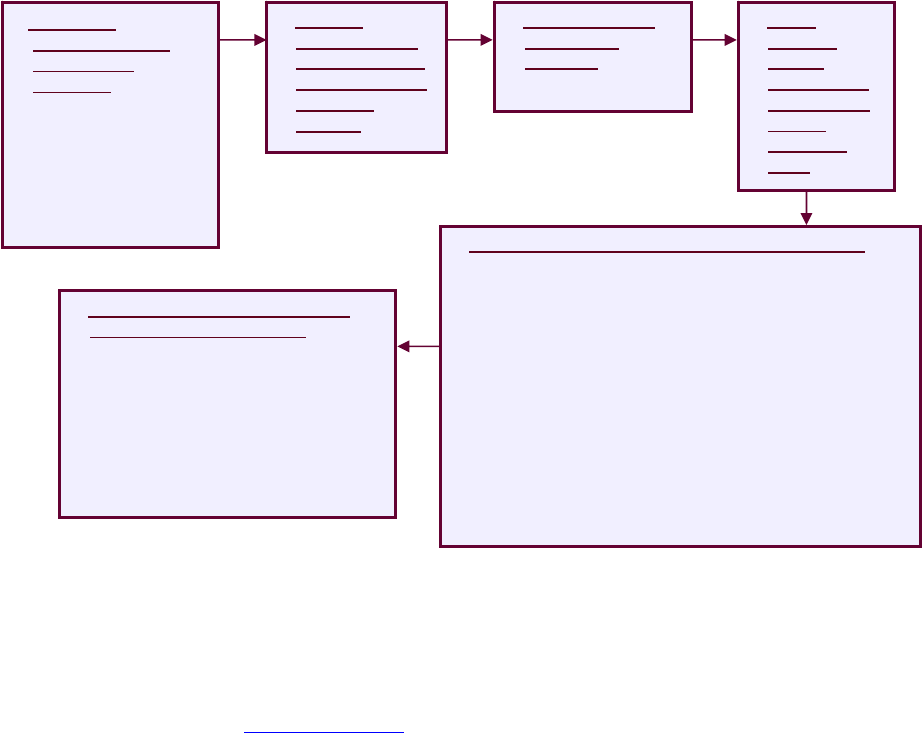

4. RECOMMENDED PROCESS

safety software applications types within D

hat needs to be accomplished for each of the respective software safety applications. In

addition, the safety software analyst needs a process to support the integration of software sa

into the system safety process to improve system and software design, development and test

efforts. Lastly, the process to manage each of the five application types should support the

planning and coordination of the software safety tasks based on established priorities. Appendix

E of this Guide presents the details of a risk-based graded approach for the analysis and safety

software management process for (1) custom developed, (2) configurable, (3) acquired,

(4) utility calculations, and (5) commercial design and analysis tools.

5. GUIDANCE

ETHODS

desig of a system, in which safety software is a subcompone

1) applying good engineering practices based upon industry proven methods and (2) guiding

design through the results of hazard analysis. Identifying and assessing the hazards is not enoug

to make a system safe. The information from hazard analysis needs to be factored in the

design.

12

Applying industry accepted software engineering and software quality engineering practi

generally

pplied to safety software to improve the quality and add a level of assurance that the software

performs its safety functions as intended. DOE O 414.1C requires SQA work activities, referred

to as work activities, to be performed for safety software. Many national and international

consensus standards, such as ASME NQA-1-2000, ANS 10.4, and the IEEE software

engineering series provide detailed guidance for performing the work activities. Section 3.5 of

this Guide describes some of these standards.

Software process capability models such as the Software Engineering Institute’s legacy Softwar

Capability Maturity Model (SW-CMM) and th

odel Integration (CMMI), are proven tools to assist in the selection of practices to perform for

achieving a level of assurance that the processes performed will produce the desired level of

quality for safety software. The CMMI has two approaches: staged and continuous. For

organizations introducing a software process improvement program, these models should be

considered.

14 DOE G 414.1-4

6-17-05

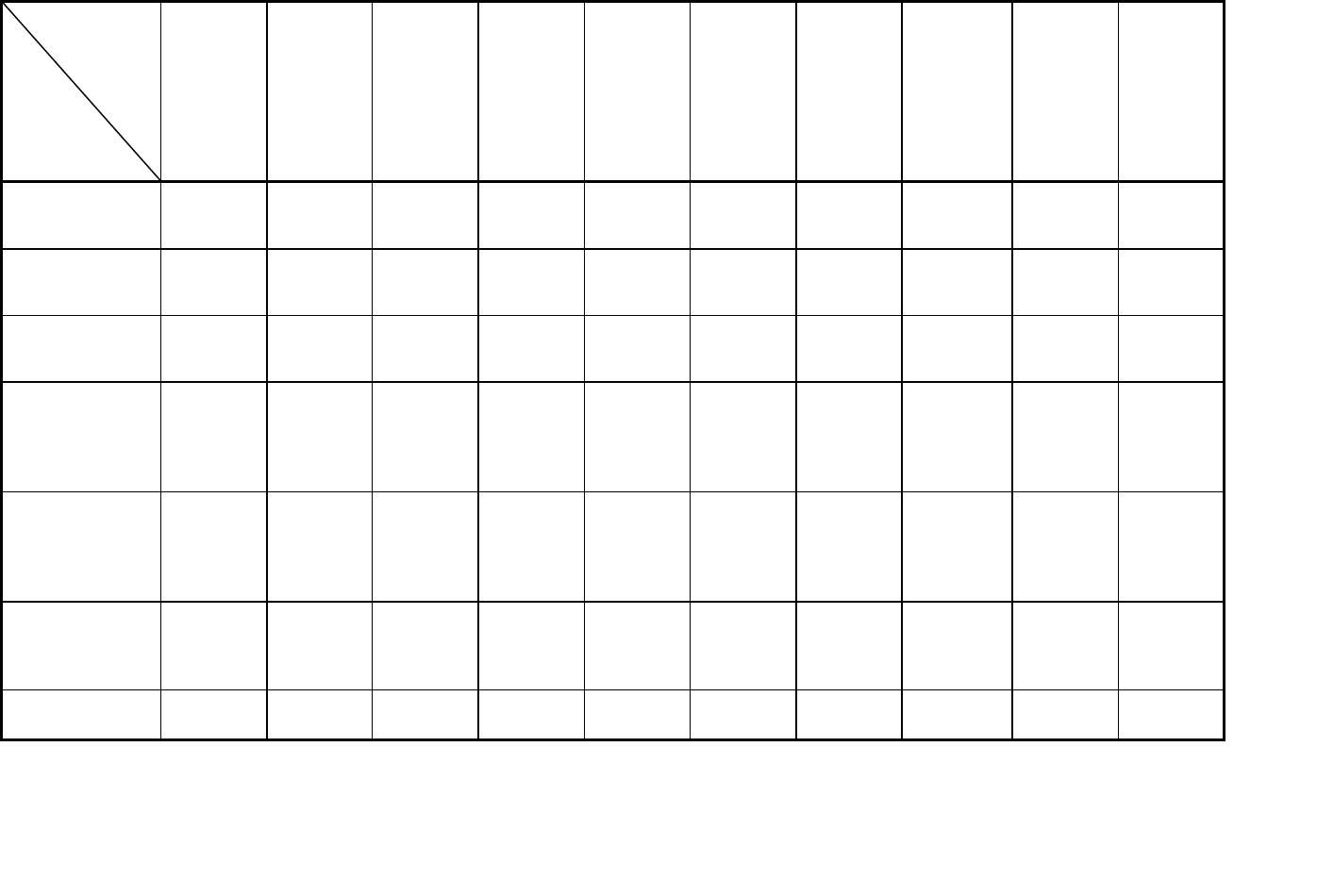

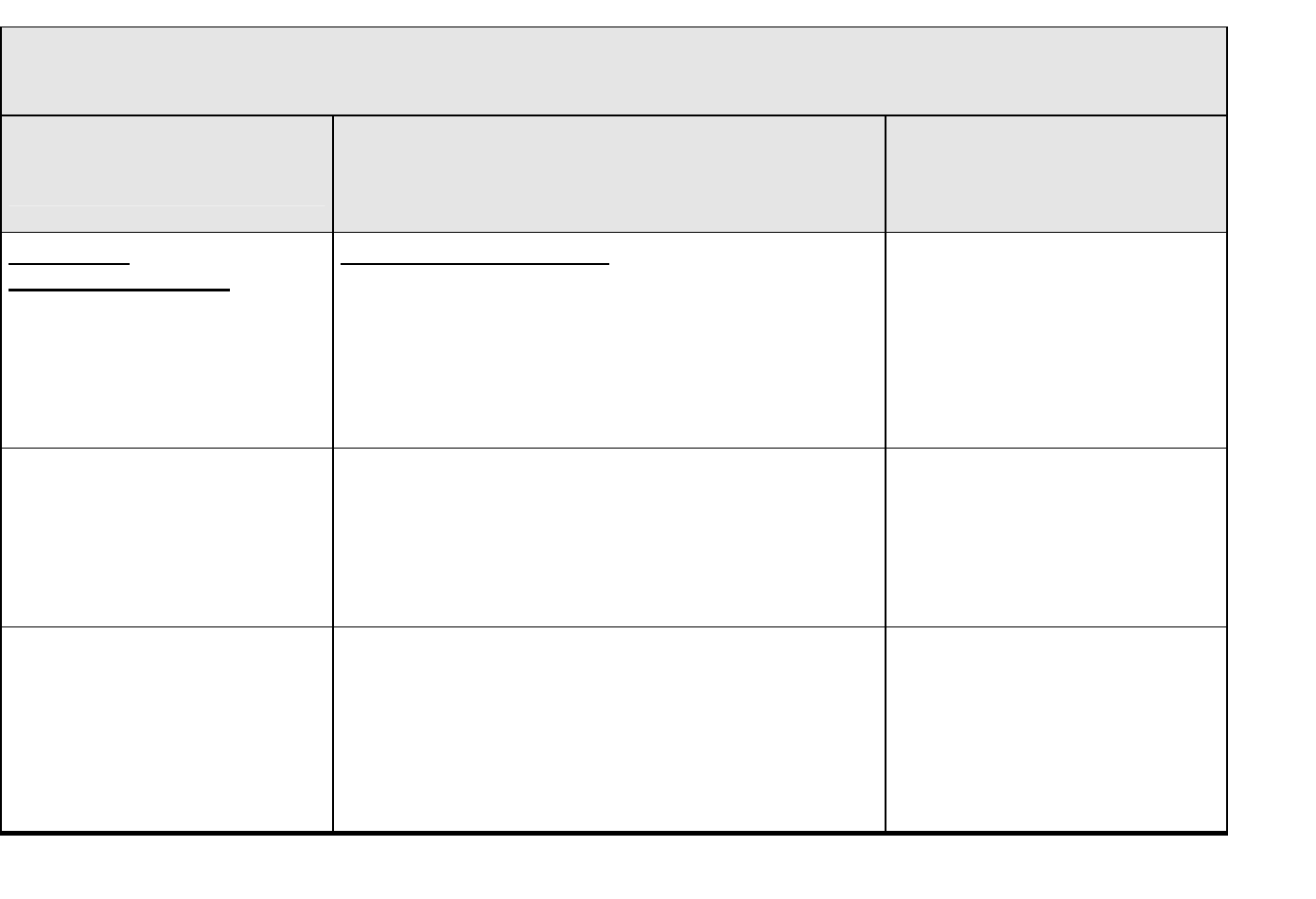

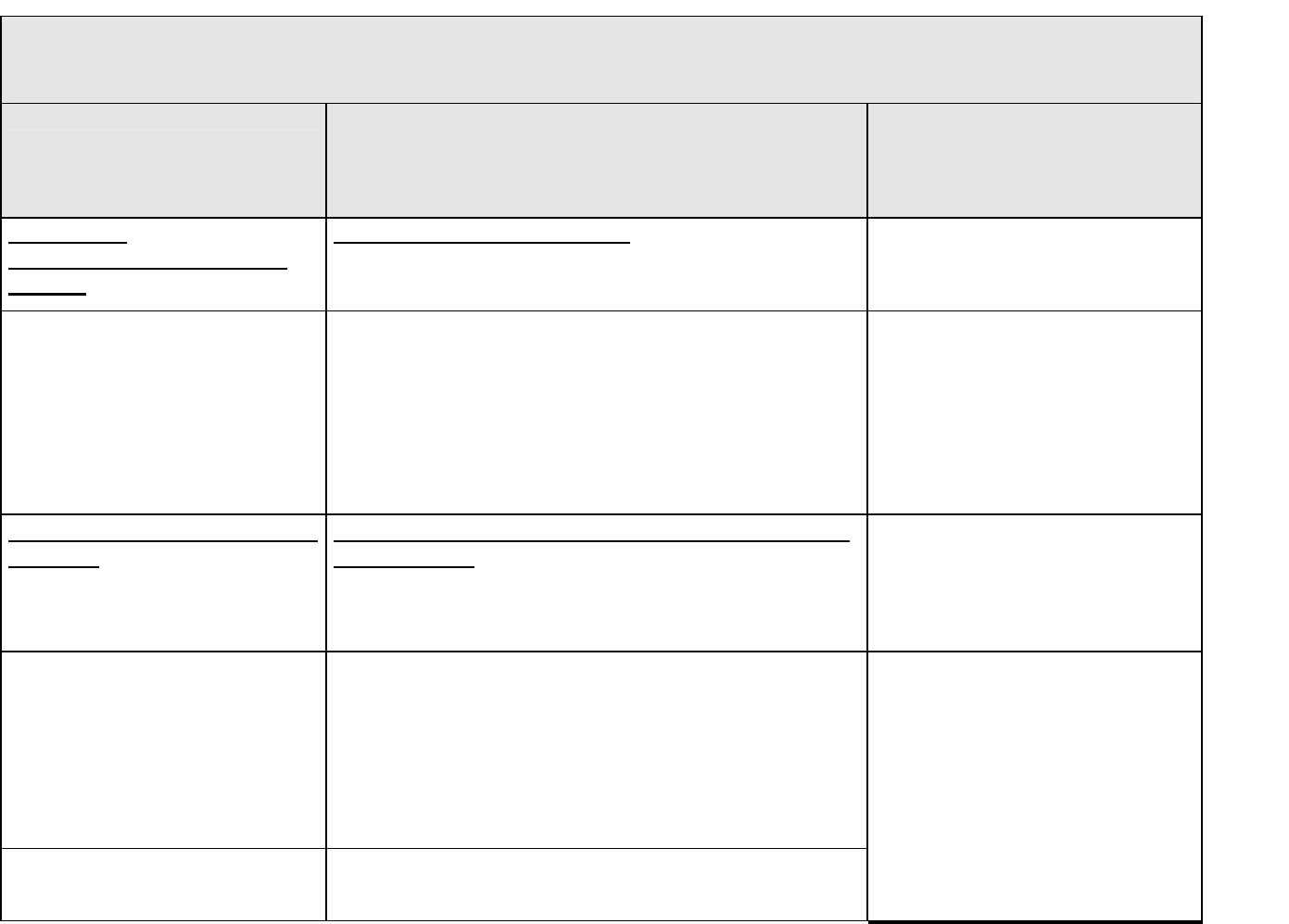

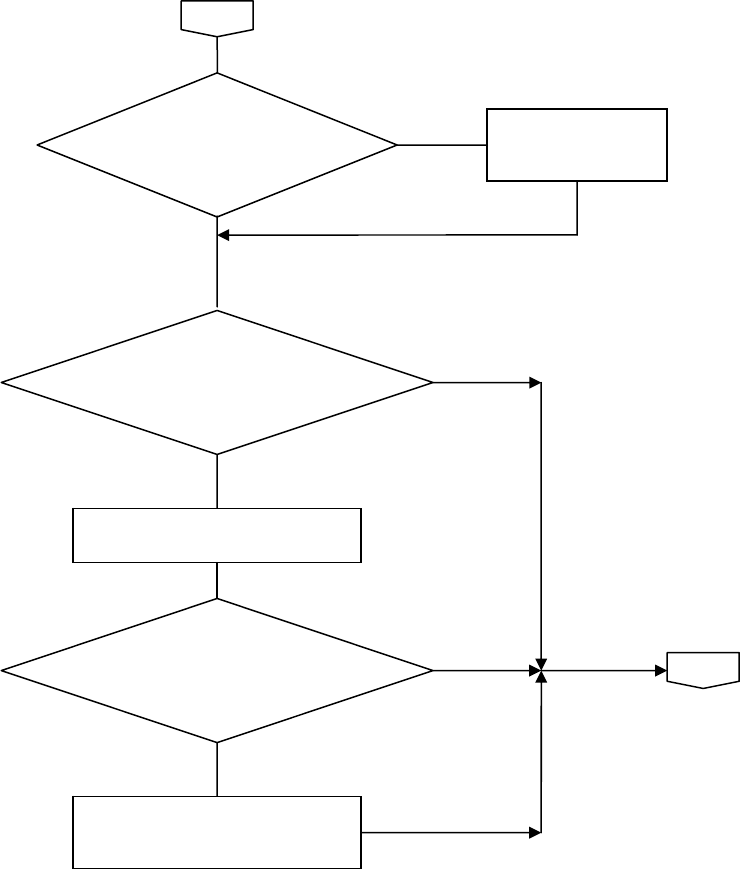

Table 3. ASME NQA-1-2000 Cross Reference to DOE Software Quality Assurance (SQA) Work Activities

SQA Work

Activities

ASME

NQA-1

Software (sw)

project management

& quality planning

Sw risk

management

Sw configuration

management

Procurement &

supplier

management

Sw requirements

identification &

management

Sw design &

implementation

Sw safety

Verification &

validation

Prblm reporting &

corrective action

Training of

…

safety sw

Organization Req. 1,

1A-1,

200

2A-2, 301

Quality

Assurance

Program

SP 2.7,

400

2A-2, 300

1A-1,

200

SP 2.7,

400

2A-2, 301,

502

Req. 1

Req. 2,

100

SP 2.7,

400

SP 4.1,

400

Req. 2

SP 2.7,

402

1A-1, 303 Req.

2A-2

2,

, 600

Design Control SP 2.7,

400

SP 4.1,

101, 200,

404, 406

Req. 3,

802

SP 2.7,

203

SP 4.1,

203

SP 2.7,

300

SP 4.1,

300

Req. 3,

800

SP 2.7,

400

SP 4.1,

400

Req. 3,

800

SP 2.7,

400

SP 4.1,

400

Req. 3,

800

SP 2.7,

402

SP 4.1,

100

Req. 3,

801.4,

801.5

Req. 11,

400

SP 2.7,

402.1, 404

SP 4.1,

402.1, 404

Req. 15

Req. 16

SP 2.7,

204

SP 4.1,

204

Procurement

Document

Control

Req. 4

Instructions,

Procedures, and

Drawings

Req. 5 Req. 5

Document

Control

Req. 6

SP 2.7,

201

SP 4.1,

201

Req. 6

SP 2.7,

201

SP 4.1,

201


Control of Purchased Items and Services

Req. 7,

SP 2.7,

300

SP 4.1,

300

Identification and Control of Items

Req. 3,

802

SP 2.7,

203

SP 4.1,

203

Control of Special

Processes

Inspection

Req. 3,

801.4,

801.5

Req. 11,

400

SP 2.7,

402.1, 404

SP 4.1,

402.1, 404

Test Control Req. 3,

801.4,

801.5

Req. 11,

400

SP 2.7,

402.1, 404

SP 4.1,

402.1, 404

16 DOE G 414.1-4

6-17-05

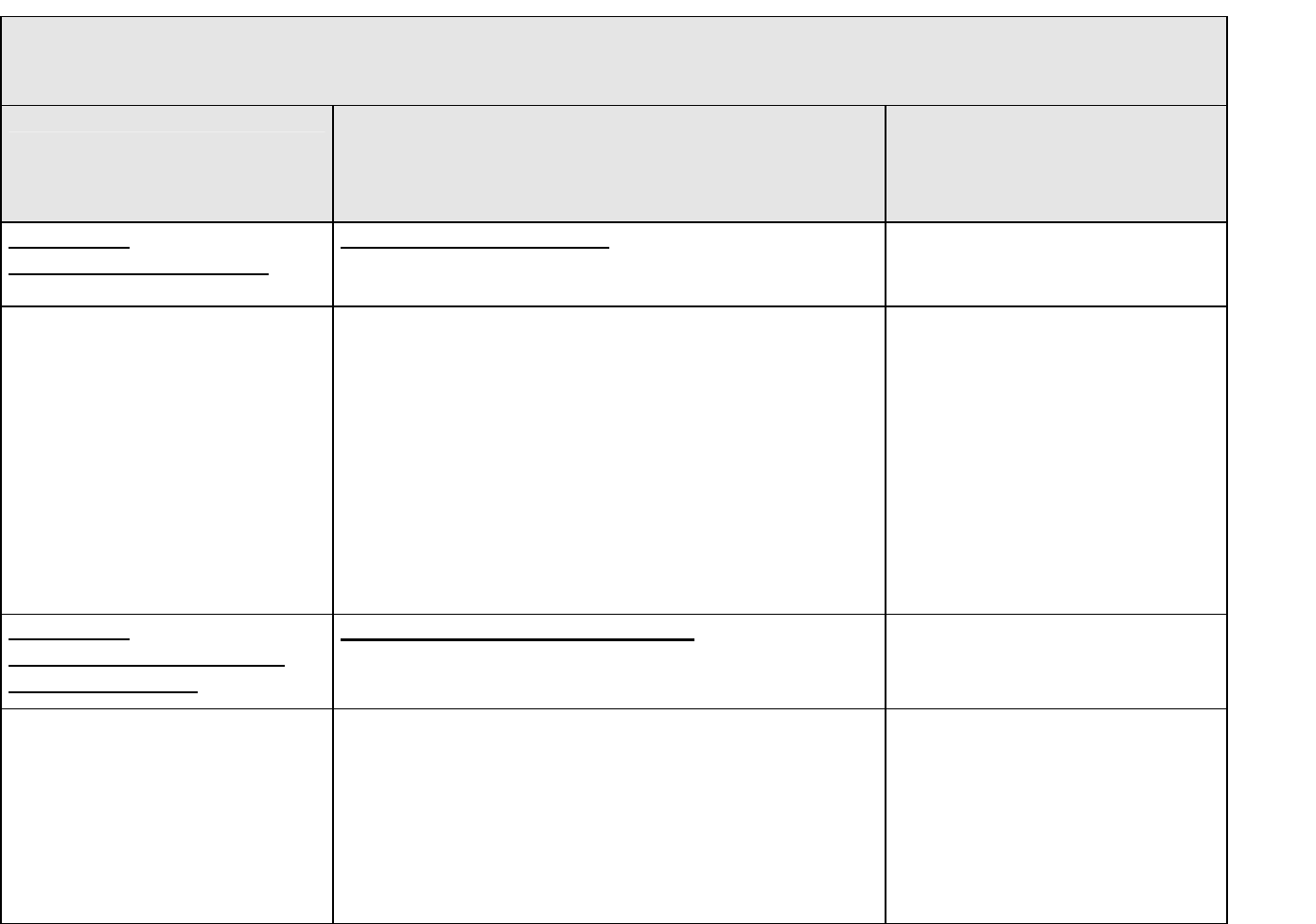

SQA Work

Activities

ASME

NQA-1

Software (sw)

project management

& quality planning

Sw risk

management

Sw configuration

management

Procurement &

supplier

management

Sw requirements

identification &

management

Sw design &

implementation

Sw safety

Verification &

validation

Prblm reporting &

corrective action

Training of …

safety sw

Control of

Measuring and

Test Equipment

SP 4.1,

101.3

Handling,

Storage, and

Shipping

Inspection, Test

and Operating

Status

Control of

Nonconforming

Items

Req. 15

SP 2.7,

204

SP 4.1,

204

Corrective Action Req. 16

SP 2.7,

204

SP 4.1,

204

Quality

Assurance

Records

SP 2.7,

201

SP 4.1,

201

Audits Req. 18

DOE G 414.1-4

6-17-05

For safety s

a

s

17

ystems, hazards and accident analyses are performed at the system level and then for

ny subcomponent of the system that potentially could have an adverse effect on safety. Since

oftware is a subcom , hazard analys afety software is

performed. Hazard analysis is best performed periodically throughout the life-cycle of the safety

software development and operations to reassess h s safety of the system and its

software. The information from these hazard an ke design decisions related to

the safety software and its associated safety system.

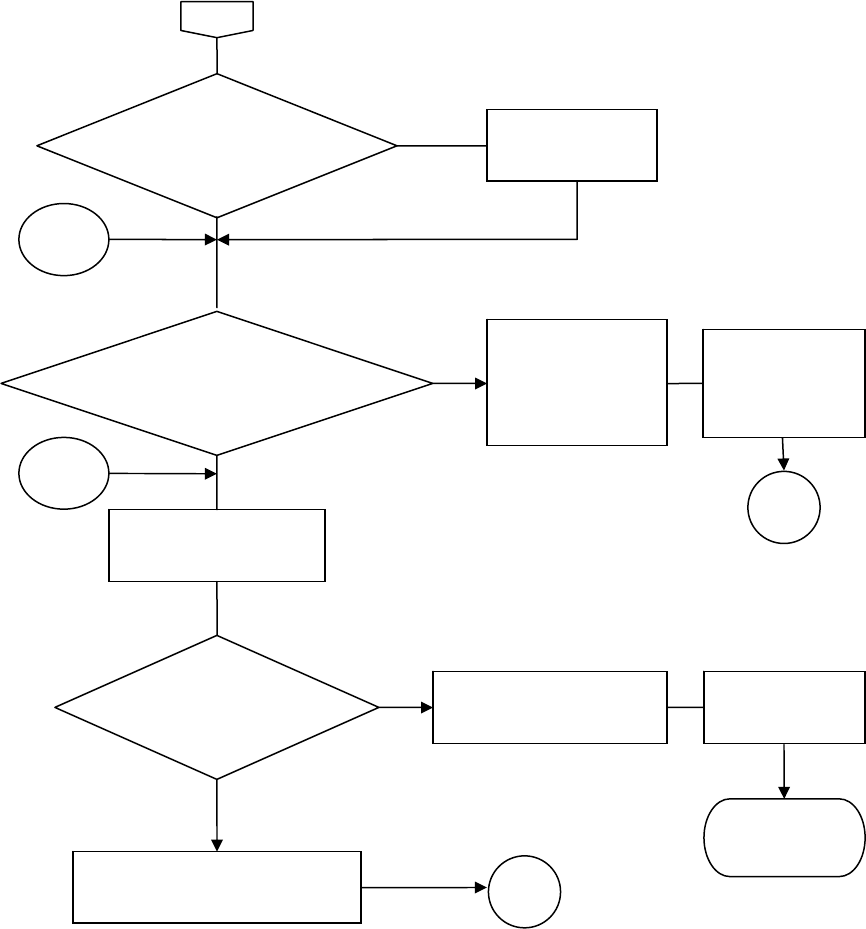

5.2 SOFTWARE WORK ACTIVITIES

Software should be lled in a traceable, planned, and orderly manner. The safety software

quality work activities defined in this section provide the basis for planning, implementing,

maintaining, and operating safety software. The work activities for safety software include tasks

such as software project planning, SCM, and risk analysis that cross all phases in the life-cycle.

Additionally, the work activities include tasks that are specific to a life-cycle phase. These work

activities cover tasks during the development, maintenance, and operations of safety software.

The work activities should be implemented based upon the graded level of the safety software

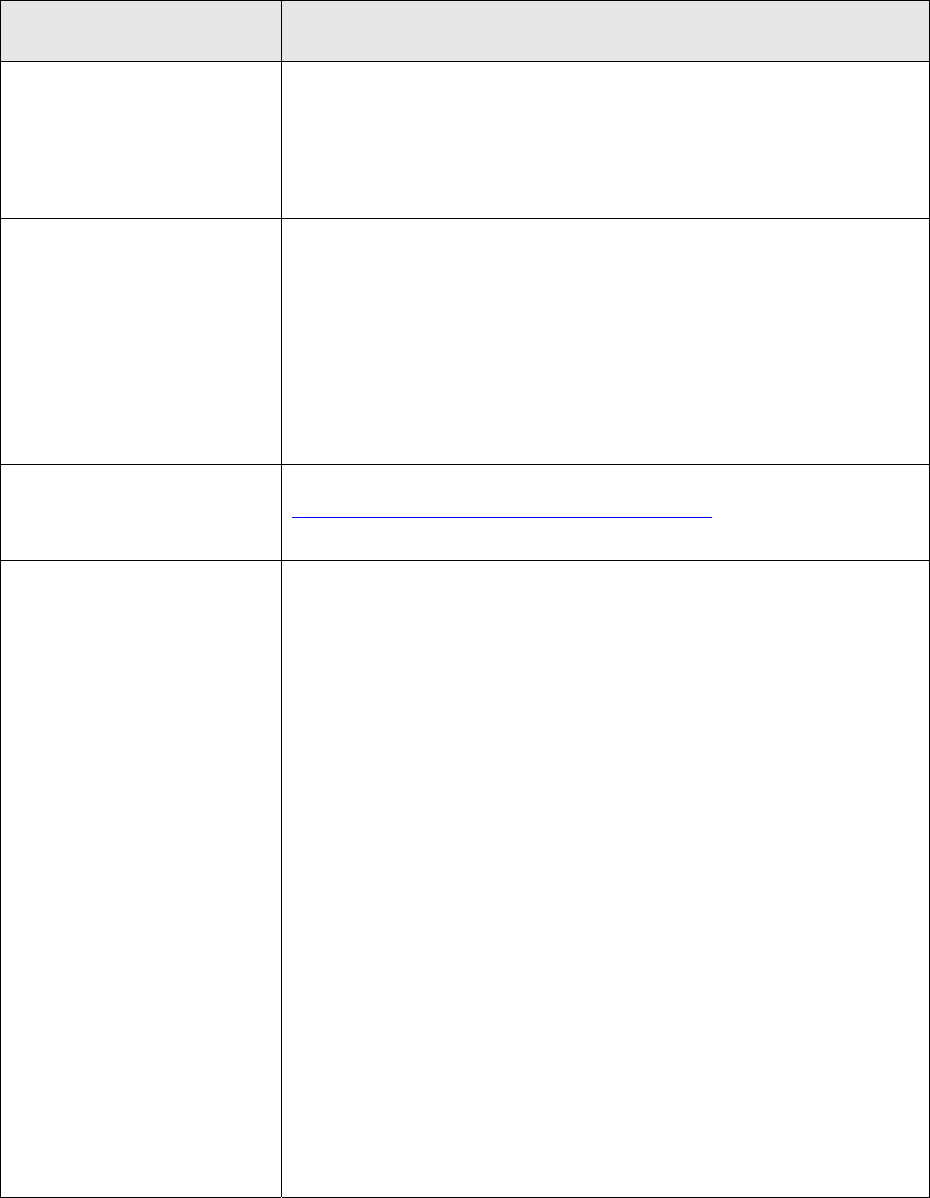

and the applicable software type. Table 4 provides a summary of the mapping between software

type, the grading levels, and the ten SQA work activities. Not all work activities will be

applicable for a particular instance of safety software. This Guide indicates where these work

activities may be omitted. However, the best judgment of the software quality engineering and

safety system staffs should take precedence over any optional work activities presented in this

Guide.

5.2.1

Software Project Management and Quality Planning

As with any system, project management and quality planning are key elements to establishing

the foundation to ensure a quality product that meets project goals. For software, project

management starts with the system level project management and quality planning. Software

specific tasks should be identified and either included within the overall system planning or in

separate software planning documents.

These tasks may be documented in a software project management plan (SPMP), an SQA plan

(SQAP), a software development plan (SDP), or similar documents. They also may be embedded

in the overall system Typically the SPMP, SQAP, and/or SDP are the

controlling documents that define and guide the processes necessary to satisfy project

requirements, including the software quality requirements. These plans are initiated early in the

project life-cycle and are maintained throughout the life of the project.

The software project management and quality planning should include identifying all tasks

associated with the sof devel ent and ent of services,

ponent of the system is specific to the s

the

yses

aza

is

rd

used to m

and

aal

contro

level planning documents.

13

tware opm procurement, including procurem

ration (CM

ng (CM

Mellon U

13

Capability Maturity M m ability Matu tegration, Version

1.1, CMMI for Softw , Stag resentatio -2002-TR-029,

ESC-TR-2002-029, C e Eng ng Institu .

odel Integ

are Engineeri

arnegie

MI) Pro

MI-S

niversity

duct Tea

W, V1.1)

, Softwar

, Cap

ed Rep

ineeri

rity Mod

n, CMU

te, 2002

el In

/SEI

18 DOE G 414.1-4

6-17-05

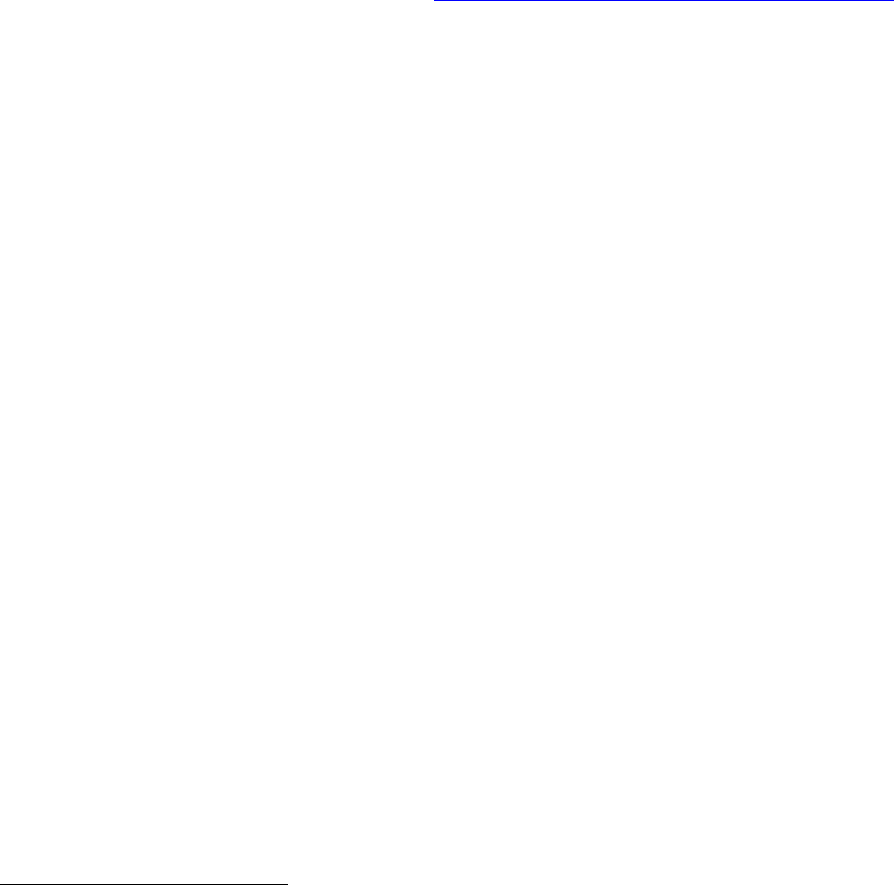

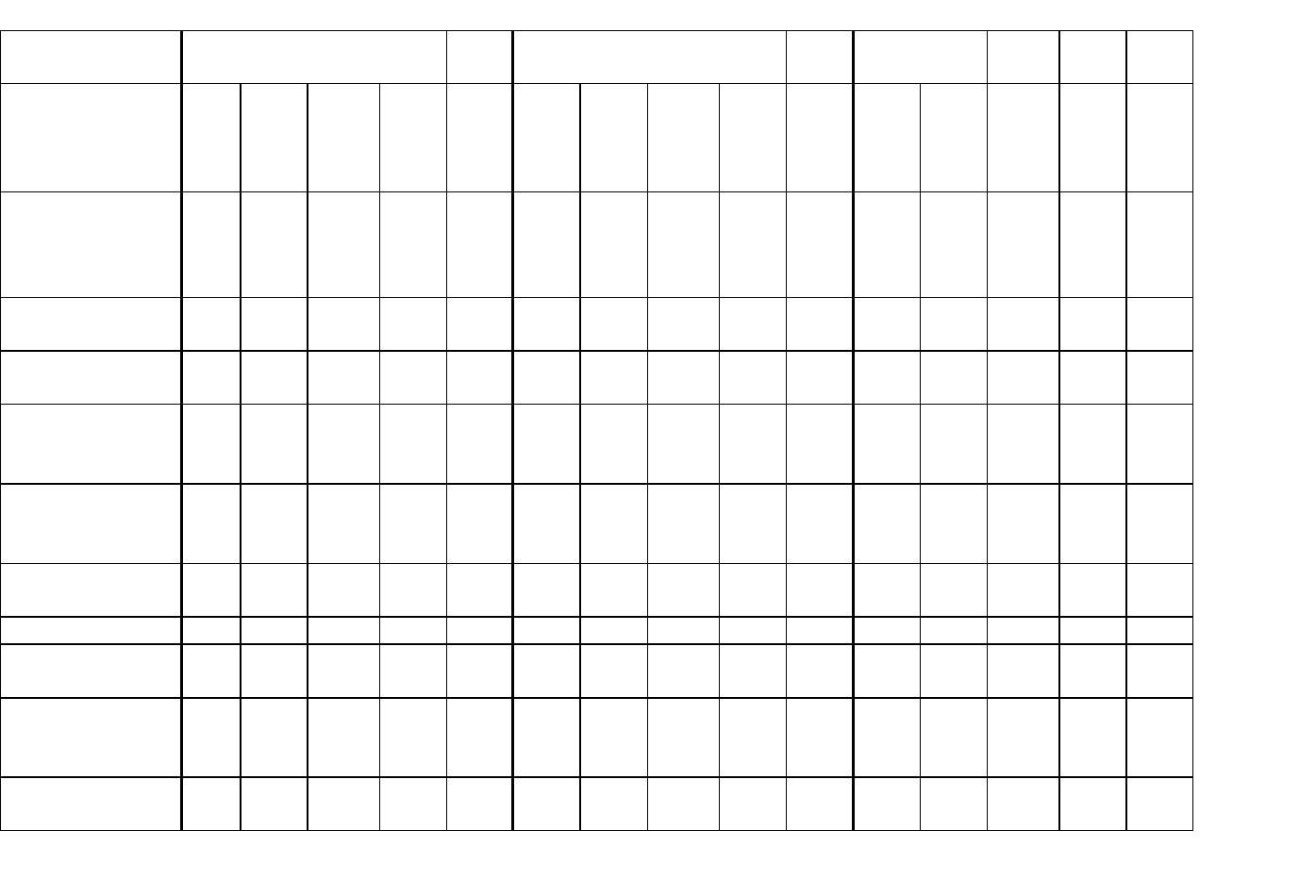

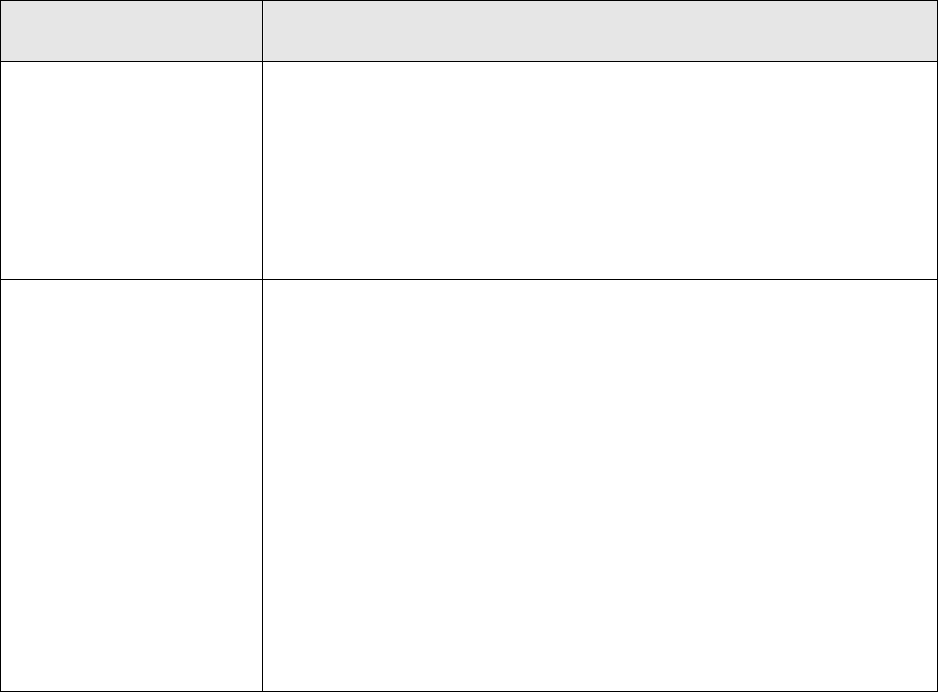

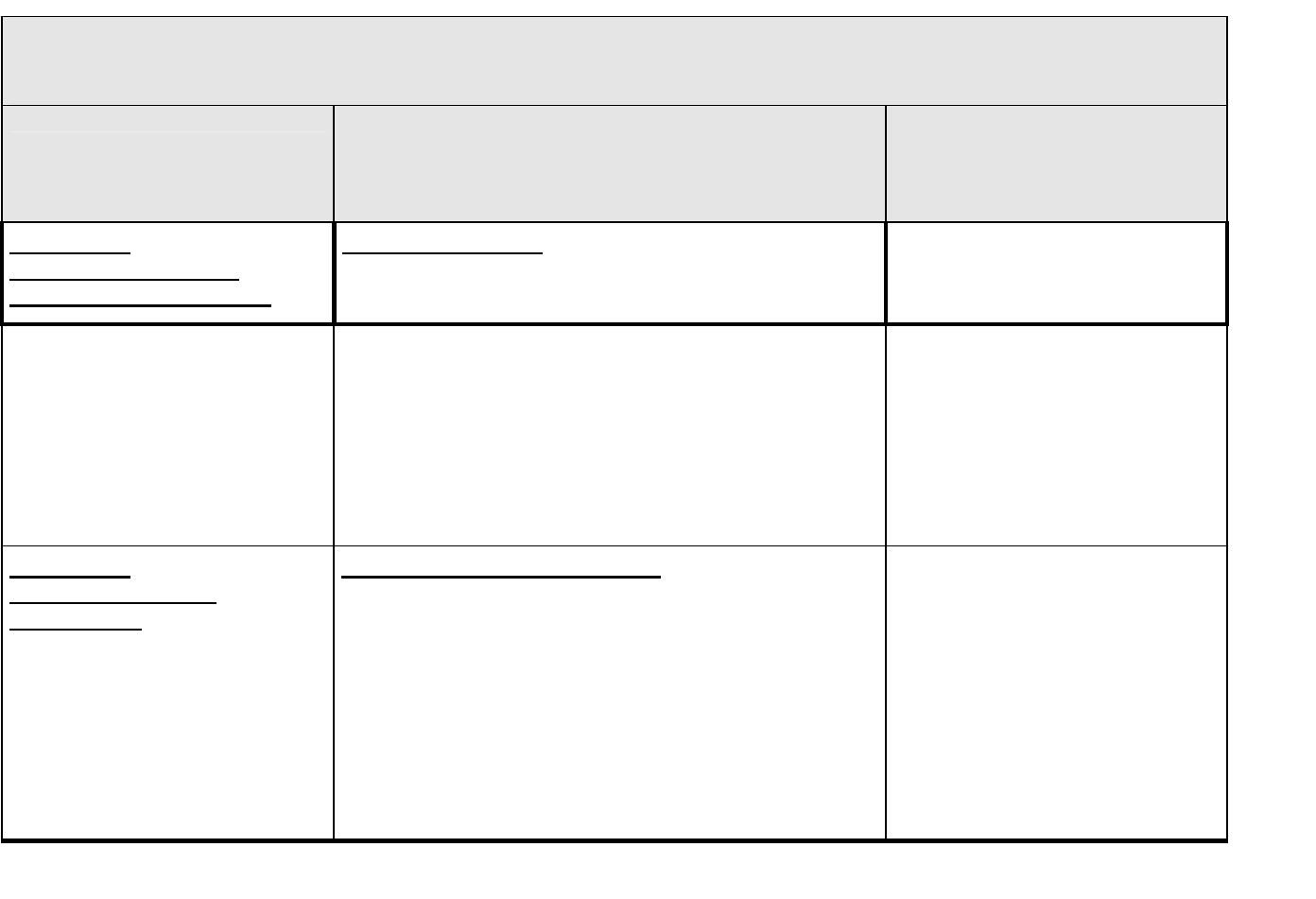

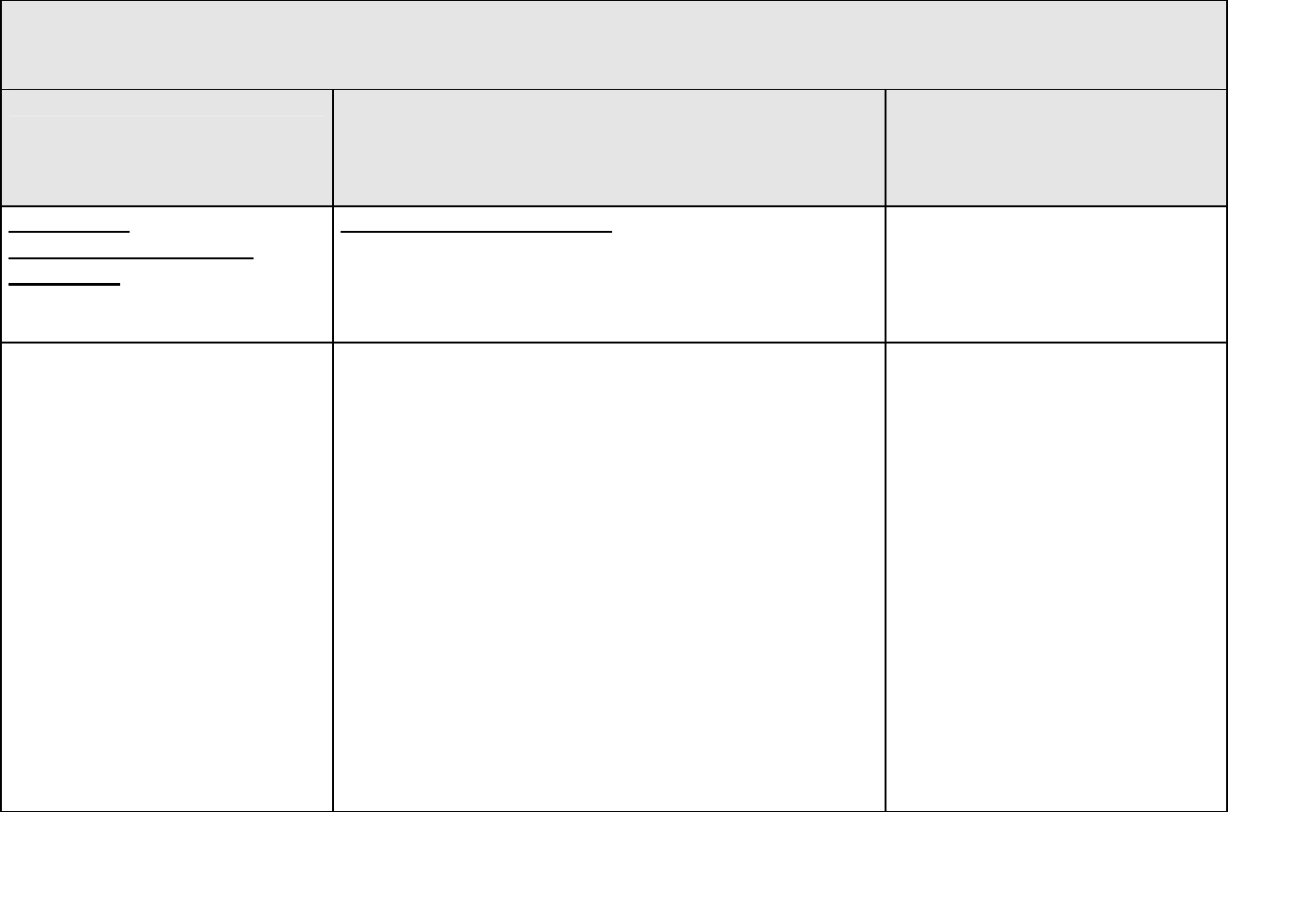

Table 4. Mapping Safety Software Types and Grading Levels to Software Quality Assurance (SQA) Work Activities

SQA Work

Activity

Level

A

Level

B

Level

C

Custom

Developed

Configurable

Acquired

Utility Calcs

Commercial

D & A

Custom

Developed

Configurable

Acquired

Utility Calcs

Commercial

D & A

Custom

Developed

Configurable

Acquired

Utility Calcs

Commercial

D & A

Software (Sw)

project

management &

quality planning

Full Full Grade Grade n/a Full Full Grade Grade n/a Grade Grade Grade Grade n/a

Sw risk

management

Full Full Full Full n/a Grade Grade Grade Grade n/a Grade Grade Grade Grade n/a

Sw configuration

management

Full Grade Grade Grade Grade Full Grade Grade Grade Grade Grade Grade Grade Grade Grade

Procurement &

supplier

management

Full Full Full Full Full Full Full Full Full Full Full Full Full Full Full

Sw requirements

identification &

management

Full Full Full Full Full Full Full Full Full Full Full Full Full Full Full

Sw design &

implementation

Full Grade n/a Grade n/a Full Grade n/a Grade n/a Full Grade n/a Grade n/a

Sw safety

Full Full Full n/a n/a Grade Grade Grade n/a n/a Grade Grade Grade n/a n/a

Verification &

Validation

Full Full Full Grade n/a Grade Grade Grade Grade n/a Grade Grade Grade Grade n/a

Problem

reporting &

corrective action

Full Full Full Grade Full Full Full Full Grade Full Full Grade Grade Grade Grade

Training of …

safety Sw

Full Full Full Full n/a Grade Grade Grade Grade n/a Grade Grade Grade Grade n/a

estimate of the duration of the tasks, resources allocated to the task, and any dependencies. The planning should include a description of the tasks and any relevant information. In addition to NQA-1-2000, several consensus standards¹⁴,¹⁵ provide details of planning documents that are good resources to assist in the identification and description of the software development and procurement tasks.

Software quality and software development planning identifies and guides the software phases and any grading of the SQA and software development activities performed during software development or maintenance. The software quality and software engineering activities, and the rigor of their implementation, will be dependent on the identified grading level of the safety software and the ability of DOE or its contractors to build quality in and assess the quality of the safety software. Because the SQAP and SDP are overall basic quality and software engineering plans, some quality activities, such as SCM, risk management, problem reporting and corrective actions, and V&V, including software reviews and testing, may be further detailed in separate plans. These plans and the activities identified in them are discussed later in this Guide.

Software project management and quality planning fully apply to custom developed and configurable software types for both Level A and Level B safety software. For Level A and Level B acquired and utility calculation software, and for all Level C software applications, software project management and quality planning tasks can be graded. This grading should include the identification and tracking of all significant software tasks. Where, for instance, the software life-cycle may include little or no software development activities, the software project and quality planning will most likely be part of the overall system level project or facility planning.

This work activity does not apply to commercial design and analysis software because the project management and quality planning activities associated with commercial design and analysis software are performed by the service supplier. DOE controls the SQA activities of that software through procurement agreements and specifications.

5.2.2 Software Risk Management

Software risk management provides a disciplined environment for proactive decision making to continuously assess what can go wrong, determine what risks are important to address, and implement actions to address those risks.¹⁶ Because risk management is such a fundamental tool for project management, it is an integral part of software project management. Although sometimes associated with safety analysis of potential failures, software risk management focuses on the risks to the successful completion of the software project. The risks addressed by this work activity are project management risks associated with the successful completion of a software application, whereas Section 5.2.7 addresses the risks associated with the potential failure modes of the software.

14 Institute of Electrical and Electronics Engineers (IEEE), IEEE Std 1058-1998, IEEE Standard for Software Project Management Plans, IEEE, 1998.

15 IEEE Std 730-2002, IEEE Standard for Software Quality Assurance Plans, IEEE, 2002.

16 SQAS21.01.00-1999 (Reference Document), Software Risk Management: A Practical Guide, Department of Energy Quality Managers Software Quality Assurance Subcommittee, dated 2-2000.
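The assess, prioritize, and track cycle described in this section can be sketched as a simple risk register. This is a minimal illustration under stated assumptions, not a method prescribed by this Guide: the `Risk` class, the exposure score (probability of occurrence times impact), the 1 to 5 impact scale, the numeric thresholds, and the probabilities themselves are all hypothetical. A threshold of zero stands in for the Level A expectation that all apparent risks, large or small, be prioritized and tracked; the higher threshold is only a stand-in for a graded Level B or Level C approach, where the safety system staff is expected to focus, qualitatively, on the adverse events that dominate the risk.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    OPEN = "open"
    RESOLVED = "resolved"  # reduced to an acceptable level of risk


@dataclass
class Risk:
    """One entry in a hypothetical software project risk register."""
    description: str
    probability: float        # assumed probability of occurrence, 0.0 to 1.0
    impact: int               # assumed consequence scale: 1 (minor) to 5 (severe)
    treatment: str = "TBD"    # e.g., "avoid", "mitigate", or "transfer"
    status: Status = Status.OPEN
    history: list[str] = field(default_factory=list)  # tracking record

    @property
    def exposure(self) -> float:
        # Simple exposure score: probability of occurrence times impact.
        return self.probability * self.impact


def prioritize(risks: list[Risk], threshold: float) -> list[Risk]:
    """Return open risks whose exposure is at or above `threshold`, highest first."""
    candidates = [r for r in risks
                  if r.status is Status.OPEN and r.exposure >= threshold]
    return sorted(candidates, key=lambda r: r.exposure, reverse=True)


def record(risk: Risk, status: Status, note: str) -> None:
    """Track a change in a risk's status, keeping a history of the change."""
    risk.status = status
    risk.history.append(f"{status.value}: {note}")


# Example register using risks named in this section (numbers are invented).
register = [
    Risk("incomplete or volatile software requirements", 0.5, 4, "mitigate"),
    Risk("new versions of the operating system", 0.25, 3, "avoid"),
    Risk("undefined or inadequate test acceptance criteria", 0.75, 5, "mitigate"),
]

# Level A stand-in: every apparent risk is prioritized (threshold of zero).
for risk in prioritize(register, threshold=0.0):
    print(f"{risk.exposure:5.2f}  {risk.description}")

# Graded stand-in for Level B or C: a hypothetical threshold screens out small risks.
graded = prioritize(register, threshold=1.0)
```

Keeping a `history` entry for every status change is one way to provide the tracking record that risk control calls for; a fuller register might also record owners, due dates, and any secondary risks created by a mitigation action.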

20 DOE G 414.1-4

6-17-05

k

g

isks throughout all phases of the project’s life-cycle should include special

mphasis on tracking the risks associated with costs, resources, schedules, and technical aspects

r

clude—

• using unproven computer and software technologies such as programming languages not

i ;

The risks associated with the safety software applications need to be understood and

documented. The above bulleted list identifies a few potential risks associated with safety

software applications. Each risk should be evaluated against its risk thresholds. Different

Risk assessment and risk control are two fundamental activities required for project success. Ris

assessment addresses identification of the potential risks, analysis of those risks, and prioritizin

the risks to ensure that the necessary resources will be available to mitigate the risks. Risk

control addresses risk tracking and resolution of the risks. Identification, tracking, and

management of the r

e

of the project. Several risk identification techniques are described and detailed in standards and

literature.

17,18

Risk resolution includes risk avoidance, mitigation, or transference. Even the small risks during

one phase of the safety software application’s life have the potential to increase in some other

phase of the application’s life with very adverse consequences. In addition, mitigation actions fo

some risks could create new (secondary) risks.

Examples of potential software risks for the safety software application might in

• incomplete or volatile software requirements;

• specification of incorrect or overly simplified algorithms or algorithms that will be very

difficult to address within safety software;

• hardware constraints that limit the design;

• potential performance issues with the design;

• a design that is based upon unrealistic or optimistic assumptions;

• design changes during coding;

• incomplete and undefined interfaces;

ntended for the target application

• use of a programming language with only minimal experience using the language;

• new versions of the operating system;

• unproven testing tools and test methods;

• insufficient time for development, coding, and/or testing;

• undefined or inadequate test acceptance criteria; and

• potential quality concerns with subcontractors or suppliers.

Christensen, Mark J., and Richard

17

H. Thayer, The Project Manager’s Guide to Software Engineering’s Best

, pp. 417–447. Practices, Institute of Electrical and Electronics Engineers Computer Society Press, 2001

18

Society of Automotive Engineers (SAE) JA1003, Software Reliability Program Implementation Guide, SAE

2004, Appendix C4.6.

DOE G 414.1-4 21

6-17-05

isks.

ritized, resolved to an acceptable level of risk, and tracked through the life of the

fety software. For Level B or Level C software applications, the granularity for the risks to be

n

determine a graded approach for resolving the

sks and the process for tracking the risks.

techniques may be used to evaluate the risks. Examples of these techniques include decision trees, scenario planning, game theory, probabilistic analysis, and linear programming. Various risk treatment alternatives should be considered to avoid, reduce, or transfer risk.

Risk management may be applied with flexibility based upon the risk categorization (Level A, B, or C) of the safety software application. For Level A safety software, all apparent risks known at the time, whether large or small, should be identified, analyzed for impact and probability of occurrence, prioritized, resolved to an acceptable level of risk, and tracked. For Level B and Level C safety software, the risks that should be identified, analyzed, prioritized, resolved to an acceptable level of risk, and tracked should be determined by the safety system staff and can be graded. The safety system staff should focus on the adverse events that would dominate the risk and assess these in a qualitative manner. The safety system staff also has the responsibility to …

This work activity does not apply to commercial design and analysis safety software because this software is used in conjunction with the design and analysis services provided to DOE by a commercial contractor. The risk management work activity associated with that software is performed by the service supplier. DOE controls the SQA activities of that software through procurement agreements and specifications of design or analysis requirements.

Further guidance beyond that in NQA-1-2000 regarding risk management is provided by IEEE Standard 16085-2004.¹⁹ This standard provides guidance regarding the risk management of acquired, developed, operational, or maintained systems to support existing organizational risk management processes. SQAS21.01.00-1999, Software Risk Management: A Practical Guide, also discusses a risk taxonomy, risk transference, and risk avoidance that may be of interest to the safety software analyst.

5.2.3 Software Configuration Management

SCM activities identify all functions and tasks required to manage the configuration of the software system, including software engineering items, establishing the configuration baselines to be controlled, and the software configuration change control process.²⁰ The following four areas of SCM should each be addressed when performing configuration management: (1) configuration identification, (2) configuration control, (3) configuration status accounting, and (4) configuration audits and reviews.²¹ This Guide extends the ASME NQA-1-2000²² software configuration management tasks by including configuration status accounting and configuration audits and reviews.²³

19 International Organization for Standardization (ISO)/Institute of Electrical and Electronics Engineers (IEEE) Std 16085, IEEE Standard for Software Engineering: Software Life Cycle Processes—Risk Management, IEEE, 2004.

20 ASME NQA-1-2000, op. cit., Part II, Subpart 2.7, Section 203, p. 105.

21 IEEE Std 828-1998, IEEE Standard for Software Configuration Management Plans, IEEE, 1998, Section 4.3.

22 ASME NQA-1-2000, op. cit., Part I, Section 802, p. 16.

23 IEEE Std 7-4.3.2-2003, IEEE Standard Criteria for Digital Computers in Safety Systems of Nuclear Power Generating Stations, IEEE, 2003, Section 5.3.5.
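The four SCM areas listed above can be illustrated with a minimal sketch. It assumes a hypothetical in-memory ConfigurationItem record and an assumed "<item>-r<revision>" baseline label scheme; neither is prescribed by this Guide or by NQA-1-2000, and the approval and verification flags are a simplification of the documented change control process. In practice, a version control or SCM tool would normally provide these functions.

```python
from dataclasses import dataclass, field


class ChangeControlError(Exception):
    """Raised when a change is not eligible to enter the baseline."""


@dataclass
class ConfigurationItem:
    # Hypothetical record; "<item_id>-r<revision>" is an assumed label scheme.
    item_id: str
    revision: int = 0
    history: list = field(default_factory=list)  # status accounting log

    @property
    def label(self) -> str:
        # (1) Configuration identification: one unique label per item revision.
        return f"{self.item_id}-r{self.revision}"


@dataclass
class ChangeRequest:
    item_id: str
    description: str
    approved: bool = False  # documented, evaluated, and approved for release
    verified: bool = False  # change verified as correctly implemented


def apply_change(item: ConfigurationItem, change: ChangeRequest) -> str:
    """(2) Configuration control: only approved, verified changes are baselined."""
    if change.item_id != item.item_id:
        raise ChangeControlError("change request targets a different item")
    if not (change.approved and change.verified):
        raise ChangeControlError("change is not approved and verified")
    item.revision += 1
    # (3) Configuration status accounting: record the new label and the reason.
    item.history.append((item.label, change.description))
    return item.label


def audit(items, documented_labels: dict) -> list:
    """(4) A simplified physical-configuration-style audit: list items whose
    current label does not match the label in the baseline documentation."""
    return [i.item_id for i in items if i.label != documented_labels.get(i.item_id)]


if __name__ == "__main__":
    solver = ConfigurationItem("SOLVER")
    apply_change(solver, ChangeRequest("SOLVER", "fix unit conversion",
                                       approved=True, verified=True))
    print(solver.label)                              # SOLVER-r1
    print(audit([solver], {"SOLVER": "SOLVER-r1"}))  # []
```

Rejecting unapproved or unverified changes before the revision number advances keeps every baseline label tied to a recorded, approved change.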


The methods used to control, uniquely identify, describe, and document the configuration of each version or update of software and its related documentation should be documented. This documentation may be included in an SCM plan or its equivalent. Such documentation should include criteria for configuration identification, change control, configuration status accounting, and configuration reviews and audits.

During operations, authorized users lists can be implemented to ensure that use of the software is limited to those persons trained and authorized to use it. Authorized users lists are access control specifications, which are addressed in Section 5.2.5, Software Requirements Identification and Management.

A baseline labeling system should be implemented that uniquely identifies each configuration item, identifies changes to configuration items by revision, and provides the ability to uniquely identify each configuration. This baseline labeling system is used throughout the software's development and operation.

Proposed changes to the software should be documented, evaluated, and approved for release. Only approved changes should be made to software that has been baselined. Software verification activities should be performed for each change to ensure that it was implemented correctly. This verification should also cover any changes to the software documentation.

Audits or reviews should be conducted to verify that the software product is consistent with the configuration item descriptions in the requirements and that the software being delivered, including all documentation, is complete. Physical configuration audits and functional configuration audits are examples of audits or reviews that should be performed.²⁴

SCM work activities should be applied beginning at the point at which DOE or its contractor takes control of the software. For custom developed safety software graded at Level A or Level B, all four areas of SCM noted above apply. For all other types of safety software graded as Level A or Level B, and for all Level C safety software, this work activity may be graded by making the performance of configuration audits and reviews optional.

5.2.4 Procurement and Supplier Management

Most software projects will have procurement activities that require interactions with suppliers, regardless of whether the software is Level A, B, or C. Procurement activities may be as basic as the purchase of compilers or other development tools for custom developed software or as complicated as procuring a complete safety system software control system. Thus, there are a variety of approaches for software procurement and supplier management based upon:

• the level of control DOE or its contractors have over the quality of the software or software service being procured and
• the complexity of the software.

24 Institute of Electrical and Electronics Engineers (IEEE) Std 1042-1987, IEEE Guide to Software Configuration Management, IEEE, 1987, Section 3.3.4.


Procurement documentation should include the technical²⁵ and quality²⁶ requirements for the safety software. Some of the specifications that should be included are:

• specifications for the software features, including requirements for safety, security, functions, and performance;
• process steps used in developing and validating the software, including any documentation to be delivered;
• requirements for supplier notification of defects, new releases, or other issues²⁷ that impact the operation; and
• mechanisms for the users of the software to report defects and request assistance in operating the software.

These requirements should be assessed for completeness and to ensure the quality of the software being purchased. There are four major approaches for this assessment:

• performing an assessment of the supplier,
• requiring the supplier to provide a self-declaration that the safety software meets the intended quality,
• accepting the safety software based upon key characteristics (e.g., a large user base), and
• verifying that the supplier has obtained a certification or accreditation of the software product quality or software quality program from a third party (e.g., the International Organization for Standardization, Underwriters Laboratories, or the Software Engineering Institute).

Although Level A, B, and C safety software applications are all required to fully meet this work activity, the implementation detail and the method used to assess the supplier can vary based on the complexity of the software and its importance to safety.

5.2.5 Software Requirements Identification and Management

Safety system requirements provide the foundation for the requirements to be implemented in the software. These system requirements should be translated into requirements specific to the software. The identified software requirements may be documented in system level requirements documents, software requirements specifications, procurement contracts, and/or other acquired software agreements. These requirements should identify functional, performance, security (including user access control), interface, and safety requirements, as well as installation considerations and design constraints where appropriate. The requirements should be complete, correct, consistent, clear, verifiable, and feasible.²⁸

25 ASME NQA-1-2000, op. cit., Part I, Requirement 4, Section 202, p. 18.

26 ASME NQA-1-2000, op. cit., Part I, Requirement 4, Section 100, p. 18.

27 ASME NQA-1-2000, op. cit., Part II, Subpart 2.7, Section 301, p. 105.

28 Institute of Electrical and Electronics Engineers (IEEE) Std 830-1998, IEEE Recommended Practice for Software Requirements Specifications, IEEE, 1998, Section 4.3.
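The user access control requirement named above, together with the authorized users lists mentioned in Section 5.2.3, might be checked along the lines of the following sketch. The AuthorizedUser record, its training-expiration field, and the may_use_software function are hypothetical; an actual implementation would normally rely on the site's existing identity and access management controls rather than an in-memory dictionary.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class AuthorizedUser:
    # Hypothetical entry; the training-expiration field is an assumption.
    user_id: str
    training_valid_until: date


def may_use_software(user_id: str, users: dict, on: date) -> bool:
    """Allow use only by listed users whose training is current on `on`."""
    entry = users.get(user_id)
    return entry is not None and on <= entry.training_valid_until


if __name__ == "__main__":
    authorized = {"jdoe": AuthorizedUser("jdoe", date(2006, 6, 30))}
    print(may_use_software("jdoe", authorized, date(2005, 9, 1)))    # True
    print(may_use_software("asmith", authorized, date(2005, 9, 1)))  # False
    print(may_use_software("jdoe", authorized, date(2006, 7, 1)))    # False
```

Tying authorization to a training record reflects the intent that only persons who are both trained and authorized use the software; a user who is not listed is denied by default.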


… an important aspect of ensuring that only authorized users can operate the system or use the software for design or analysis tasks. Controlling access is a … fications as part of the …²⁹

Once the requirements have been defined and documented, they should be managed to minimize … to ensure the corr… to operations. Software requirements should be traceable …
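Requirements traceability can be checked mechanically once requirements, design elements, and tests carry identifiers. The sketch below assumes a hypothetical mapping from each requirement ID to the design elements and test cases that address it; the identifiers and data layout are illustrative only and are not taken from this Guide. It reports requirements with no downstream design or test link (forward gaps) and tests that trace to no requirement (backward gaps).

```python
# Hypothetical traceability data: requirement ID -> (design elements, tests).
TRACE = {
    "SR-1": ({"D-10"}, {"T-100"}),
    "SR-2": ({"D-11"}, set()),
}
REQUIREMENTS = {"SR-1", "SR-2", "SR-3"}
TESTS = {"T-100", "T-999"}


def untraced_requirements(requirements, trace):
    """Forward check: requirements lacking a design element or a test."""
    gaps = []
    for req in sorted(requirements):
        design, tests = trace.get(req, (set(), set()))
        if not design or not tests:
            gaps.append(req)
    return gaps


def orphan_tests(all_tests, trace):
    """Backward check: tests that do not trace to any requirement."""
    linked = set()
    for _design, tests in trace.values():
        linked |= tests
    return sorted(all_tests - linked)


if __name__ == "__main__":
    print(untraced_requirements(REQUIREMENTS, TRACE))  # ['SR-2', 'SR-3']
    print(orphan_tests(TESTS, TRACE))                  # ['T-999']
```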

This work activity has no grading associated with its performance. Software requirements …ns and sho… …rement. However, the detail and format of the safety software

requirements may vary with the software type. Custom developed software most likely will